Onboard Google Cloud Projects

This topic describes how to add Google Cloud projects to ACE Cloud Network Security.

You can choose from four onboarding methods to add new Google Cloud projects. The first uses scripts; the others do not. The method you select also determines whether changes made to account resources after onboarding are automatically synced from Google Cloud Platform to your environment.

Onboarding Methods

Note: Depending on which onboarding method you choose, changes to onboarded account resources may be automatically synced every hour.

| Onboarding Method | Description | Automatic sync* |
|---|---|---|
| With script (via wizard) | Uses scripts to onboard Google Cloud resources | Yes |
| No script (via wizard) | Onboards Google Cloud resources without using scripts | Yes |
| API (single account) | Onboards a single Google Cloud account via API | No |
| Terraform | Onboards Google Cloud resources using Terraform | Yes |

Notes:

- To add Google Cloud projects to ACE, you need Google Cloud service account credentials.
- Onboarding means giving ACE access to collect data from your Google Cloud projects. To stop data collection (delete your project), you must withdraw that access by revoking the ACE permissions within your cloud vendor environment.
- Project IDs must be unique across all organizations for the onboarding process to complete successfully.
- Changes to projects in an onboarded Google Cloud folder or organization automatically sync with ACE once every hour.
- Discovery of projects might take some time after initial onboarding, especially when onboarding at the organization level.

Note: For more information about the GCP onboarding script, see Inside the Google Onboarding Script.

Before you start

To connect a Google Cloud account that is managed by an organization, make sure you are logged in to the Google Cloud console.

Required permissions and roles

To onboard multiple projects in a Google Cloud account, make sure you have the following roles enabled:

- To onboard projects under a folder: roles/resourcemanager.folderAdmin
- To onboard projects under an organization: roles/resourcemanager.organizationAdmin

To learn more about the roles and APIs required by Cloud App Analyzer, see Permissions Required for Google Cloud Projects.
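As a quick pre-check, the role requirement above can be expressed as a lookup plus a `gcloud` query. The sketch below is illustrative only: the helper names `required_role_for` and `policy_command_for` are hypothetical, the IDs and email address are placeholders, and the script prints the `gcloud` command rather than running it so that you can review it first.

```shell
#!/usr/bin/env bash
# Sketch: map the onboarding scope to the role named in this topic and
# print a gcloud command that lists the roles bound to a given user.
# Helper names are hypothetical; nothing here calls Google Cloud.

required_role_for() {
  case "$1" in
    folder)       echo "roles/resourcemanager.folderAdmin" ;;
    organization) echo "roles/resourcemanager.organizationAdmin" ;;
    *)            echo "unknown scope: $1" >&2; return 1 ;;
  esac
}

policy_command_for() {
  local scope=$1 id=$2 user=$3
  local group
  case "$scope" in
    folder)       group="resource-manager folders" ;;
    organization) group="organizations" ;;
    *)            return 1 ;;
  esac
  # Flatten the policy so each member/role pair can be filtered by user.
  printf 'gcloud %s get-iam-policy %s --flatten="bindings[].members" --filter="bindings.members:%s" --format="value(bindings.role)"\n' \
    "$group" "$id" "$user"
}

required_role_for folder
policy_command_for organization 123456789012 admin@example.com
```

If the printed command's output includes the role returned by `required_role_for`, the signed-in user should meet the requirement for that scope.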

Onboarding Google Cloud Projects

Onboard Google Cloud resources using your preferred method:

Do the following:

-

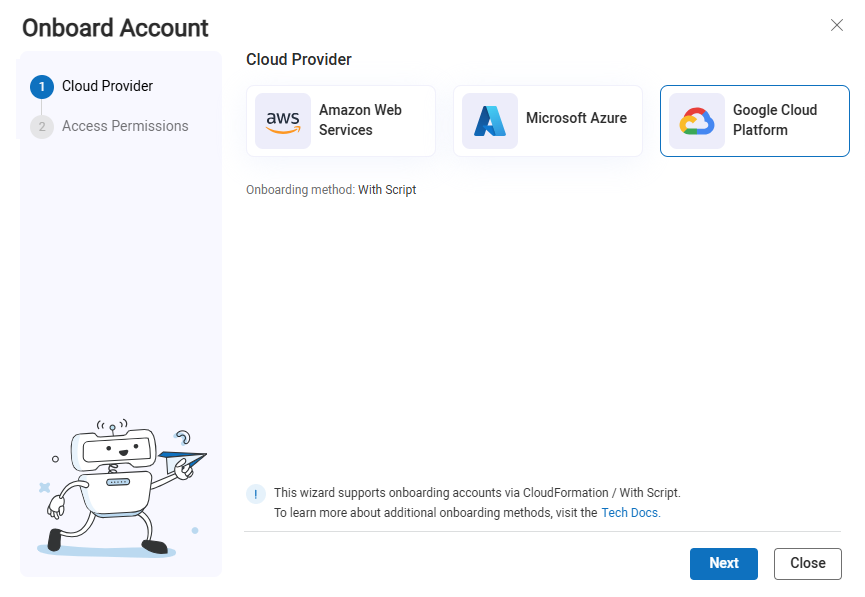

In the ACE

Settings area, click

Settings area, click  ONBOARDING.

ONBOARDING.On the Onboarding Management page that opens, click +Onboard Accounts. The Onboard Account Cloud Provider selection page appears.

-

Click the

Google Cloud Platform button and click Next.

Google Cloud Platform button and click Next.The Google Access Permissions step appears..

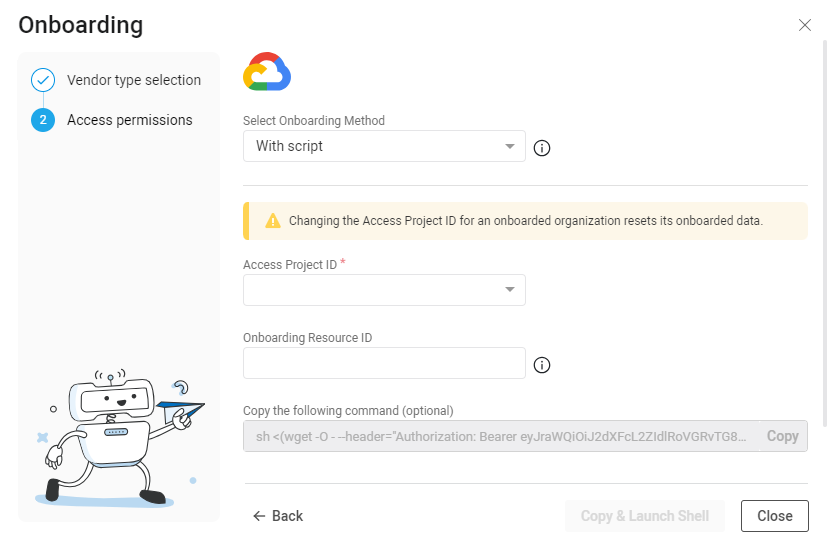

-

In the Access Project ID field, input an Access Project ID using one of the following methods:

-

From the dropdown: Select an existing Google Cloud Project ID to grant access to the Google Cloud resource. ACE will have access to the previous resources and the new resource.

-

Enter a new Access Project ID into the field: The project is used to establish access to the Google Cloud resources.

Warning: Changing the Access Project ID for an already onboarded organization resets its onboarded data.

-

-

(Optional) In the Onboarding resource ID field, enter the ID of the project, folder, or organization root to onboard.

Note: When this field is left blank, ACE will onboard the project you entered in the Access project ID field.

-

Complete the onboarding using one of the following methods:

-

To open a Cloud Shell session directly from the ACE interface:

-

Click Copy & Launch Shell.

The Cloud Shell command is automatically copied into the system memory and the browser opens a new tab with the Google Cloud Shell displayed.

Important: Before clicking Copy & Launch Shell, make sure no Google Cloud Shell terminals are already open to avoid "Billing not found" errors.

-

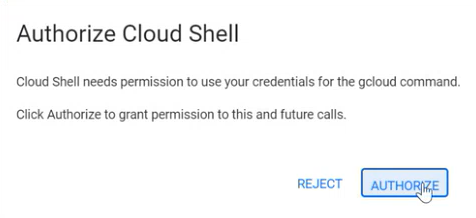

Paste the Cloud Shell command from system memory (Ctrl-Shift-V) and press Enter.

The Authorize Cloud Shell confirmation window appears.

-

Click AUTHORIZE.

An onboarding script runs in the Cloud Shell. This script creates an access project and automates all the steps necessary to onboard the resource(s) to ACE.

-

When the script finishes, press Ctrl-D to close the terminal.

The ACE Onboarding Management page displays the newly onboarded resources.

Note: It may take up to an hour for Google Cloud to sync with ACE.

-

-

(Alternative method) If you don't want to open a Cloud Shell session directly from the wizard, you can run bash locally using a proxy:

-

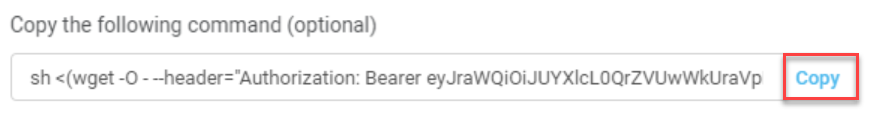

Click Copy to copy the Cloud Shell command.

Note: The command generates an unreadable script. Expand the example below to see the readable version of the script:

Example of the script

Copy

Example of the script

Copy#!/bin/bash

#Algosec Cloud tenantId

ALGOSEC_TENANT_ID='<ALGOSEC_TENANT_ID>'

#Algosec Cloud onboarding URL

ALGOSEC_CLOUD_HOST='https://<HOST>'

ALGOSEC_CLOUD_ONBOARDING_URL="$ALGOSEC_CLOUD_HOST<ONBOARDING_PATH>"

# Token

TOKEN='<ONBOARDING_TOKEN>'

ADDITIONALS='<ALGOSEC_ADDITIONALS>'

ENV='<ENVIRONMENT>'

# Define a working project where a service account would be created

PROJECT_ID='<GCP_PROJECT_ID>'

PROJECT_ID=($(echo $PROJECT_ID | tr -d '\n'))

# Define the target project/folder/organization to be onboarded into Algosec Cloud

TARGET_RESOURCE='<GCP_TARGET_RESOURCE>'

TARGET_RESOURCE=($(echo $TARGET_RESOURCE | tr -d '\n'))

# Service account definitions

SERVICE_ACCOUNT_NAME="algosec-cloud-sa-$ENV"

SERVICE_ACCOUNT_DISPLAY_NAME="Algosec Cloud SA"

SERVICE_ACCOUNT_DESCRIPTION="Service account for Algosec Cloud"

SERVICE_ACCOUNT_EMAIL=$SERVICE_ACCOUNT_NAME@$PROJECT_ID.iam.gserviceaccount.com

# Define associative array for onboarded organization:service-accounts list

declare -A ONBOARDED_ORGS_SERVICE_ACCOUNTS=( <GCP_ONBOARDED_ORGS_SERVICE_ACCOUNTS> )

# Define function to bind resource to a service-account

add_resource_access_to_service_account(){

local resource=$1

local target_resource=$2

local service_account_email=$3

local organization_id=$4

#----------------------------------------------------

# Create custom role if it doesn't exist

local custom_role=organizations/$organization_id/roles/inheritedPolicyACViewer

gcloud iam roles describe inheritedPolicyACViewer --organization=$organization_id 2>/dev/null

if [ $? -ne 0 ]; then

echo "Creating custom role inheritedPolicyACViewer"

gcloud iam roles create inheritedPolicyACViewer --organization=$organization_id \

--title="Inherited Policy Viewer Algosec Cloud" --description="Inherited Viewer Roles for Algosec Cloud" \

--permissions="essentialcontacts.contacts.list,essentialcontacts.contacts.get,compute.firewallPolicies.list,resourcemanager.folders.get,resourcemanager.organizations.get,storage.buckets.list" --stage=ALPHA 1>/dev/null

if [ $? -ne 0 ]; then

echo "ERROR: The onboarding process has failed while creating organization role — please ensure you have the required permissions"

exit 1

fi

fi

# Assign custom role to service-account at org level

echo "Assigning [$custom_role] role to service-account email [$service_account_email] at organization level [$organization_id]"

eval $(echo "gcloud organizations add-iam-policy-binding $organization_id --member=serviceAccount:$service_account_email --role=$custom_role 1>/dev/null")

if [ $? -ne 0 ]; then

echo "ERROR: The onboarding process has failed while adding roles — please ensure you have the required permissions"

exit 1

fi

#----------------------------------------------------

# Assign required roles for target resource to service-account

roles=( <SERVICE_ACCOUNT_ROLES> )

for role in ${roles[@]}; do

echo "Adding a role to the IAM policy of the [${resource%s} $target_resource]: [roles/$role]"

eval $(echo "gcloud $resource add-iam-policy-binding $target_resource --member=serviceAccount:$service_account_email --role=roles/$role 1>/dev/null")

if [ $? -ne 0 ]; then

echo "ERROR: The onboarding process has failed while adding roles — please ensure you have the required permissions"

exit 1

fi

done

}

# Verify billing account

IFS=$'\n' BILLING_ACCOUNTS=($(gcloud alpha billing accounts list --filter=open=true --format json 2>/dev/null | jq -r '.[].name'))

if [ ${#BILLING_ACCOUNTS[@]} -eq 0 ]; then

echo "ERROR: No billing account found"

echo "Billing must be enabled for activation of some of the services used by Algosec Cloud"

exit 1

fi

# Find target resource type and organization

ORGANIZATION_IDS=($(gcloud organizations list --format json | jq -r '.[].name' | cut -d'/' -f2))

if [ ${#ORGANIZATION_IDS[@]} -eq 0 ]; then

echo "ERROR: No organizations found"

echo "Algosec Cloud supports only the Google Workspace and Cloud Identity accounts that have access to additional features of the Google Cloud resource hierarchy, such as organization and folder resources."

exit 1

else

for orgId in "${ORGANIZATION_IDS[@]}"; do

if [[ $orgId == $TARGET_RESOURCE ]]; then

ORGANIZATION_ID=$orgId

RESOURCE="organizations"

RESOURCE_TYPE="organization"

TARGET="$RESOURCE_TYPE $TARGET_RESOURCE"

echo "Target resource found: Organization Id [$ORGANIZATION_ID]"

break

fi

done

fi

# In case target resource isn't an organization

if [ -z "$TARGET" ];then

gcloud resource-manager folders describe $TARGET_RESOURCE 2>/dev/null

if [ $? -eq 0 ]; then

RESOURCE="resource-manager folders"

RESOURCE_TYPE="folder"

TARGET="$RESOURCE_TYPE $TARGET_RESOURCE"

echo "Target resource found: Folder [$TARGET_RESOURCE]"

if [ -z ${ORGANIZATION_ID} ];then

ORGANIZATION_ID=$(gcloud resource-manager folders get-ancestors-iam-policy $TARGET_RESOURCE --format="json(id,type)" | jq -r '.[] | select(.type == "organization") | .id')

echo "Organization Id [$ORGANIZATION_ID] found for Folder Id [$TARGET_RESOURCE]"

fi

else

# In case target resource isn't a folder

gcloud projects describe $TARGET_RESOURCE 2>/dev/null

if [ $? -eq 0 ]; then

RESOURCE="projects"

RESOURCE_TYPE="project"

TARGET="$RESOURCE_TYPE $TARGET_RESOURCE"

echo "Target resource found: Project [$TARGET_RESOURCE]"

if [ -z ${ORGANIZATION_ID} ];then

ORGANIZATION_ID=$(gcloud projects get-ancestors $TARGET_RESOURCE --format="json(id,type)" | jq -r '.[] | select(.type == "organization") | .id')

echo "Organization Id [$ORGANIZATION_ID] found for Project Id [$TARGET_RESOURCE]"

fi

else

echo "ERROR: The target resource [$TARGET_RESOURCE] wasn't found or the user has no permission to work with it"

echo "The onboarding process has failed — please ensure you have the required permissions"

exit 1

fi

fi

fi

# Find organization for access project id

ACCESS_ORGANIZATION_ID=$(gcloud projects get-ancestors $PROJECT_ID --format="json(id,type)" | jq -r '.[] | select(.type == "organization") | .id')

if [ -z ${ACCESS_ORGANIZATION_ID} ];then

echo "ERROR: Failed to retrieve organization id for the given access project id — please ensure you have the required permissions"

exit 1

fi

# Verify project id

gcloud projects describe $PROJECT_ID 2>/dev/null

if [ $? -ne 0 ]; then

echo "ERROR: Cannot find project with ID $PROJECT_ID"

exit 1

fi

# Verify project service-accounts

gcloud config set project $PROJECT_ID

eval $(echo "gcloud iam service-accounts list --project $PROJECT_ID --format json 1>/dev/null")

if [ $? -ne 0 ]; then

echo "ERROR: The onboarding process has failed while listing service-accounts — please ensure you have the required permissions"

exit 1

fi

# Update existing Algosec Cloud service-account of project or delete if service-account is from another project in the organization

echo "Finding existing service-accounts for Algosec Cloud"

# Check if access organization is already known

if [[ -v ONBOARDED_ORGS_SERVICE_ACCOUNTS["$ACCESS_ORGANIZATION_ID"] ]]; then

ONBOARDED_SA=${ONBOARDED_ORGS_SERVICE_ACCOUNTS["$ACCESS_ORGANIZATION_ID"]}

# Update service-account if it already exists

if [[ "$ONBOARDED_SA" == "$SERVICE_ACCOUNT_EMAIL" ]]; then

echo "Algosec Cloud service-account $ONBOARDED_SA for access project id $PROJECT_ID already exists"

echo "Binding service-account: $ONBOARDED_SA to resource: ${RESOURCE%s} $TARGET_RESOURCE"

add_resource_access_to_service_account $RESOURCE $TARGET_RESOURCE $ONBOARDED_SA $ORGANIZATION_ID

echo "Success: ${RESOURCE%s} $TARGET_RESOURCE onboarded to Algosec Cloud successfully. The onboarding process may take up to several hours."

exit 0

else

# Delete access organization service-account from another project

# Extract project id from SA email

SA_PROJECT_ID="${ONBOARDED_SA#*@}"

SA_PROJECT_ID="${SA_PROJECT_ID%%.*}"

gcloud iam service-accounts list --project $SA_PROJECT_ID --format="value(email)" | grep $ONBOARDED_SA

if [ $? -eq 0 ]; then

echo "Deleting old service-account [$ONBOARDED_SA]"

gcloud config set project $SA_PROJECT_ID

gcloud iam service-accounts delete $ONBOARDED_SA --quiet

fi

fi

else

echo "No existing service-account found for ${ACCESS_ORGANIZATION_ID}"

fi

echo "Preparing to onboard the target resource [$TARGET]"

# Service-account creation

# Delete service-account if it already exists before creating

gcloud config set project $PROJECT_ID

gcloud iam service-accounts list --project $PROJECT_ID --format="value(email)" | grep $SERVICE_ACCOUNT_EMAIL

if [ $? -eq 0 ]; then

echo "Algosec Cloud service-account already exists ${SERVICE_ACCOUNT_EMAIL}. Deleting it."

gcloud iam service-accounts delete $SERVICE_ACCOUNT_EMAIL --quiet

sleep 3

fi

# Create service-account

echo "Creating service account [$SERVICE_ACCOUNT_NAME]"

gcloud iam service-accounts create $SERVICE_ACCOUNT_NAME --description="$SERVICE_ACCOUNT_DESCRIPTION" --display-name="$SERVICE_ACCOUNT_DISPLAY_NAME" 1>/dev/null

if [ $? -ne 0 ]; then

echo "ERROR: The onboarding process has failed while creating service-account — please ensure you have the required permissions"

exit 1

fi

sleep 3

# Verify service account was created

gcloud iam service-accounts describe "$SERVICE_ACCOUNT_EMAIL" 1>/dev/null

if [ $? -ne 0 ]; then

echo "ERROR: Service account does not exist after creation attempt."

exit 1

fi

# Create service-account key

echo "Creating a key for the service account [$SERVICE_ACCOUNT_NAME]"

rm -f $SERVICE_ACCOUNT_NAME.json

gcloud iam service-accounts keys create "$SERVICE_ACCOUNT_NAME.json" --iam-account=$SERVICE_ACCOUNT_EMAIL 1>/dev/null

if [ $? -ne 0 ]; then

echo "ERROR: The onboarding process has failed while creating key — please ensure you have the required permissions"

exit 1

fi

SERVICE_ACCOUNT_KEY=$(cat $SERVICE_ACCOUNT_NAME.json | base64 | tr -d \\n )

# Add roles to resource for service-account

add_resource_access_to_service_account $RESOURCE $TARGET_RESOURCE $SERVICE_ACCOUNT_EMAIL $ORGANIZATION_ID

#----------------------------------------------------

#GCP region

REGION='<GCP_REGION>'

#Prevasio host

PREVASIO_HOST='<PREVASIO_HOST>'

#Prevasio source code location

SOURCES_URL='<PREVASIO_SOURCES_URL>'

PROJECT_IDS=()

HASH=$(echo -n "$ALGOSEC_TENANT_ID" | cut -c1-5)

get_project_ids() {

local resource_type=$1

local resource_id=$2

if [[ "$resource_type" == "project" ]]; then

PROJECT_IDS+=("$resource_id")

else

echo "Fetching projects for $resource_type $resource_id"

projects=($(gcloud projects list --format="value(projectId)"))

for curr_project in "${projects[@]}" ; do

ancestor=$(gcloud projects get-ancestors "$curr_project" --format json | jq ".[] | select(.type == \"$resource_type\" and .id == \"$resource_id\")")

if [[ -n "$ancestor" ]]; then

PROJECT_IDS+=("$curr_project")

fi

done

fi

}

create_application() {

PROJECT_NUMBER=$(gcloud projects describe "${1}" --format="value(projectNumber)")

echo "Creating secrets [prevasio-$HASH-auth-token, prevasio-$HASH-url, prevasio-$HASH-api-key] in project $1"

printf "${3}" | gcloud secrets create prevasio-$HASH-org-id --data-file=- --project=$1 --quiet --verbosity=error > /dev/null

gcloud secrets add-iam-policy-binding prevasio-$HASH-org-id --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" --role="roles/secretmanager.secretAccessor" --quiet --verbosity=error

printf "${PREVASIO_HOST}" | gcloud secrets create prevasio-$HASH-host --data-file=- --project=$1 --quiet --verbosity=error > /dev/null

gcloud secrets add-iam-policy-binding prevasio-$HASH-host --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" --role="roles/secretmanager.secretAccessor" --quiet --verbosity=error

printf "${ADDITIONALS}" | gcloud secrets create prevasio-$HASH-additionals --data-file=- --project=$1 --quiet --verbosity=error > /dev/null

gcloud secrets add-iam-policy-binding prevasio-$HASH-additionals --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" --role="roles/secretmanager.secretAccessor" --quiet --verbosity=error

printf "${ALGOSEC_CLOUD_HOST}" | gcloud secrets create prevasio-$HASH-algosec-cloud-host --data-file=- --project=$1 --quiet --verbosity=error > /dev/null

gcloud secrets add-iam-policy-binding prevasio-$HASH-algosec-cloud-host --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" --role="roles/secretmanager.secretAccessor" --quiet --verbosity=error

echo "Deploying cloud function prevasio-$HASH-events-forwarder in project $1"

gcloud functions deploy prevasio-$HASH-events-forwarder --gen2 --set-env-vars=HASH=$HASH --set-secrets=PREVASIO_HOST=prevasio-$HASH-host:1,PREVASIO_ADDITIONALS=prevasio-$HASH-additionals:1,ORGANIZATION_ID=prevasio-$HASH-org-id:1,ALGOSEC_CLOUD_HOST=prevasio-$HASH-algosec-cloud-host:1 --region=$2 --runtime=python310 --source=./function/events_forwarder --entry-point=forward_func --trigger-http --no-allow-unauthenticated --max-instances=1 --memory=128Mi --project=$1 --quiet --verbosity=error > /dev/null

echo "Deploying cloud function prevasio-$HASH-cloud-run-scanner in project $1"

gcloud functions deploy prevasio-$HASH-cloud-run-scanner --gen2 --region=$2 --runtime=python310 --source=./function/cloud_run_scanner --entry-point=scan_func --trigger-http --no-allow-unauthenticated --max-instances=1 --memory=256Mi --project=$1 --quiet --verbosity=error > /dev/null

gcloud scheduler jobs create http prevasio-$HASH-cloud-run-scanner-scheduler --schedule="0 */6 * * *" --uri="https://$2-$1.cloudfunctions.net/prevasio-$HASH-cloud-run-scanner" --location=$2 --oidc-service-account-email=$PROJECT_NUMBER-compute@developer.gserviceaccount.com

echo "Enabling Binary Authorization for Cloud Run services and jobs"

curl -X POST -H "Authorization: Bearer $(gcloud auth print-identity-token)" -s -o /dev/null "https://$2-$1.cloudfunctions.net/prevasio-$HASH-cloud-run-scanner"

gcloud pubsub topics create prevasio-$HASH-images-to-sign --project=$1 --quiet --verbosity=error > /dev/null

gcloud pubsub topics add-iam-policy-binding prevasio-$HASH-images-to-sign --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" --role="roles/pubsub.publisher" --quiet --verbosity=error

echo "Deploying cloud function prevasio-$HASH-image-attestation-creator in project $1"

gcloud functions deploy prevasio-$HASH-image-attestation-creator --gen2 --set-env-vars=HASH=$HASH --region=$2 --runtime=python310 --source=./function/image_attestation_creator --entry-point=creator_func --trigger-http --no-allow-unauthenticated --max-instances=10 --memory=128Mi --project=$1 --quiet --verbosity=error > /dev/null

gcloud pubsub subscriptions create prevasio-$HASH-image-attestation-creator-subscription --topic=projects/$1/topics/prevasio-$HASH-images-to-sign --expiration-period=never --push-endpoint=https://$2-$1.cloudfunctions.net/prevasio-$HASH-image-attestation-creator --push-auth-service-account=$PROJECT_NUMBER-compute@developer.gserviceaccount.com --ack-deadline=65 --message-retention-duration=10m --project=$1 --quiet --verbosity=error > /dev/null

IMAGE_BATCH=()

for current_region in $(gcloud compute regions list --format="value(name)" --project=${1} --quiet 2>/dev/null); do

for current_repository in $(gcloud artifacts repositories list --location=${current_region} --project=${1} --format="value(REPOSITORY)" 2>/dev/null); do

for current_image in $(gcloud artifacts docker images list ${current_region}-docker.pkg.dev/${1}/${current_repository} --project=${1} --format="value[separator='@'](IMAGE,DIGEST)" 2>/dev/null); do

IMAGE_BATCH+=($current_image)

if [[ "${#IMAGE_BATCH[@]}" -ge 10 ]] ; then

gcloud pubsub topics publish prevasio-$HASH-images-to-sign --message="$(IFS=, ; echo "${IMAGE_BATCH[*]}")" --project=${1} > /dev/null

IMAGE_BATCH=()

fi

done

done

done

if [[ "${#IMAGE_BATCH[@]}" -gt 0 ]] ; then

gcloud pubsub topics publish prevasio-$HASH-images-to-sign --message="$(IFS=, ; echo "${IMAGE_BATCH[*]}")" --project=${1} > /dev/null

fi

should_create_topic=true

for topic in $(gcloud pubsub topics list --format='value(name)' --project=${1}); do

if [[ "$topic" == */gcr ]] ; then

should_create_topic=false

fi

done

if $should_create_topic ; then

echo "Creating gcr topic to get Artifact Registry events in project $1"

gcloud pubsub topics create gcr --project=$1 --quiet --verbosity=error > /dev/null

fi

echo "Creating subscription prevasio-$HASH-event-subscription in project $1"

gcloud pubsub subscriptions create prevasio-$HASH-event-subscription --topic=projects/$1/topics/gcr --expiration-period=never --push-endpoint=https://$2-$1.cloudfunctions.net/prevasio-$HASH-events-forwarder --push-auth-service-account=$PROJECT_NUMBER-compute@developer.gserviceaccount.com --ack-deadline=65 --message-retention-duration=10m --project=$1 --quiet --verbosity=error > /dev/null

}

create_attestor() {

NOTE_ID="prevasio-$HASH-note"

ATTESTOR_ID="prevasio-$HASH-attestor"

PROJECT_NUMBER=$(gcloud projects describe "${1}" --format="value(projectNumber)")

echo "Creating prevasio-$HASH-attestor in project $1"

echo "{

'attestation': {

'hint': {

'human_readable_name': 'Prevasio attestation authority'

}

}

}" > ./attestor_note.json

curl -X POST -H "Content-Type: application/json" -H "Authorization: Bearer $(gcloud auth print-access-token)" --data-binary @./attestor_note.json -s -o /dev/null "https://containeranalysis.googleapis.com/v1/projects/${1}/notes/?noteId=${NOTE_ID}"

rm -rf ./attestor_note.json

gcloud container binauthz attestors create $ATTESTOR_ID --attestation-authority-note=$NOTE_ID --attestation-authority-note-project=$1 --project=$1 --quiet --verbosity=error

echo "Adding Prevasio IAM role for prevasio-$HASH-attestor in project $1"

BINAUTHZ_SA_EMAIL="service-${PROJECT_NUMBER}@gcp-sa-binaryauthorization.iam.gserviceaccount.com"

echo "{

'resource': 'projects/${1}/notes/${NOTE_ID}',

'policy': {

'bindings': [

{

'role': 'roles/containeranalysis.notes.occurrences.viewer',

'members': [

'serviceAccount:${BINAUTHZ_SA_EMAIL}'

]

}

]

}

}" > ./iam_request.json

curl -X POST -H "Content-Type: application/json" -H "Authorization: Bearer $(gcloud auth print-access-token)" --data-binary @./iam_request.json -s -o /dev/null "https://containeranalysis.googleapis.com/v1/projects/${1}/notes/${NOTE_ID}:setIamPolicy"

rm -rf ./iam_request.json

echo "Adding Prevasio KMS key for prevasio-$HASH-attestor in project $1"

gcloud kms keyrings create prevasio-attestor-keyring --location=global --verbosity=critical --project=$1 --quiet > /dev/null

gcloud kms keys create prevasio-attestor-key --keyring=prevasio-attestor-keyring --location=global --purpose=asymmetric-signing --default-algorithm=ec-sign-p256-sha256 --verbosity=critical --project=$1 --quiet > /dev/null

gcloud kms keys add-iam-policy-binding prevasio-attestor-key --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" --role="roles/cloudkms.signer" --location=global --keyring=prevasio-attestor-keyring --quiet --verbosity=error > /dev/null

gcloud beta container binauthz attestors public-keys add --attestor=$ATTESTOR_ID --keyversion-project=$1 --keyversion-location=global --keyversion-keyring=prevasio-attestor-keyring --keyversion-key=prevasio-attestor-key --keyversion=1 --project=$1 --quiet --verbosity=error > /dev/null

if $IMAGE_LOCKING_ENABLED ; then

echo "Setting prevasio-$HASH-attestor to the binary auth policy in project $1"

gcloud container binauthz policy export > ./policy.yaml --project=$1 --quiet --verbosity=error

ATTESTORS=()

for exported_attestor in $(awk '$1 == "-"{ if (key == "requireAttestationsBy:") print $NF; next } {key=$1}' ./policy.yaml); do

ATTESTORS+=($exported_attestor)

done

ATTESTORS+=(projects/$1/attestors/$ATTESTOR_ID)

{

printf 'defaultAdmissionRule:\n'

printf '  enforcementMode: ENFORCED_BLOCK_AND_AUDIT_LOG\n'

printf '  evaluationMode: REQUIRE_ATTESTATION\n'

printf '  requireAttestationsBy:'

for attestor in "${ATTESTORS[@]}"; do

printf '\n  - %s' "$attestor"

done

printf '\nglobalPolicyEvaluationMode: ENABLE'

} > ./updated_policy.yaml

gcloud container binauthz policy import ./updated_policy.yaml --project=$1 --quiet --verbosity=error > /dev/null

rm -rf ./policy.yaml

rm -rf ./updated_policy.yaml

fi

}

create_project_resources() {

create_attestor $1

create_application $1 $REGION $2

}

mkdir -p prevasio-onboarding && rm -rf prevasio-onboarding/*

cd prevasio-onboarding

echo "Downloading Prevasio application resources"

wget -O sources.zip "${SOURCES_URL}?tenant_id=${ALGOSEC_TENANT_ID}"

unzip sources.zip

get_project_ids "$RESOURCE_TYPE" "$TARGET_RESOURCE"

for project in "${PROJECT_IDS[@]}" ; do

echo "Setting current project to [$project]"

gcloud config set project "$project"

echo "Checking mandatory APIs for [$project]"

MANDATORY_APIS=(artifactregistry.googleapis.com cloudfunctions.googleapis.com cloudkms.googleapis.com cloudscheduler.googleapis.com compute.googleapis.com container.googleapis.com pubsub.googleapis.com secretmanager.googleapis.com binaryauthorization.googleapis.com cloudbuild.googleapis.com containeranalysis.googleapis.com run.googleapis.com)

ENABLED_APIS=()

for enabled_api in $(gcloud services list --enabled --project ${project} --format="value(config.name)"); do

ENABLED_APIS+=("$enabled_api")

done

SHOULD_EXIT=false

for mandatory_api in "${MANDATORY_APIS[@]}"; do

API_NOT_PRESENTED=true

for enabled_api in "${ENABLED_APIS[@]}"; do

if [[ "$mandatory_api" == "$enabled_api" ]] ; then

API_NOT_PRESENTED=false

fi

done

if $API_NOT_PRESENTED ; then

echo "Mandatory API $mandatory_api is not enabled. Please, enable it and try to onboard one more time"

SHOULD_EXIT=true

fi

done

if $SHOULD_EXIT ; then

continue

fi

gcloud iam roles create algosec.gke.custom.role \

--title="Algosec GKE Custom Role" \

--permissions="container.jobs.create,container.jobs.delete,container.namespaces.create,container.nodes.proxy,container.pods.getLogs" \

--project=$project 2>/dev/null

gcloud projects add-iam-policy-binding $project \

--member=serviceAccount:$SERVICE_ACCOUNT_EMAIL \

--role=projects/$project/roles/algosec.gke.custom.role 1>/dev/null

create_project_resources "$project" "$ORGANIZATION_ID"

done

cd ..

rm -rf prevasio-onboarding

#----------------------------------------------------

# Activate service account

gcloud config set account $SERVICE_ACCOUNT_EMAIL

gcloud auth activate-service-account --project=$PROJECT_ID --key-file $SERVICE_ACCOUNT_NAME.json

# Send service-account details to Algosec Cloud

response=$(curl -X POST "$ALGOSEC_CLOUD_ONBOARDING_URL" \

-H "Content-Type: application/json" \

-H "Accept: application/json" \

-H "Authorization: $TOKEN" \

--silent \

-d '{ "organization_id":"'$ACCESS_ORGANIZATION_ID'", "data":"'$SERVICE_ACCOUNT_KEY'", "project_id":"'$PROJECT_ID'", "event": { "RequestType": "Create" } }')

status=$(echo $response | jq -r '.initialOnboardResult' | jq -r '.status')

message=$(echo $response | jq -r '.initialOnboardResult' | jq -r '.message')

if [ "$status" == 200 ]; then

echo "The onboarding process is finished: $message"

echo "Press CTRL+D to close the terminal session"

else

echo "ERROR: The onboarding process has failed: $message"

fi

   b. Paste and run the script in your alternative shell to complete onboarding the resource(s).

   The ACE Onboarding Management page displays the newly onboarded resources.

   Note: It may take up to an hour for Google Cloud to sync with ACE.

Requires ACE read-only access

You can onboard Google Cloud Projects without using a script if your system does not support using scripts.

Do the following:

1. Log in to the Google Cloud console (https://console.cloud.google.com/) as a user with the following permissions:

   - Organization Role Administrator or IAM Role Administrator
   - Organization Policy Administrator

-

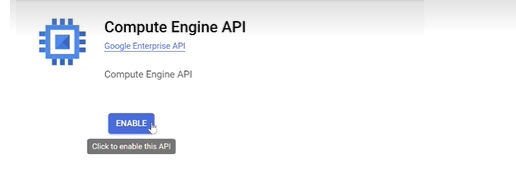

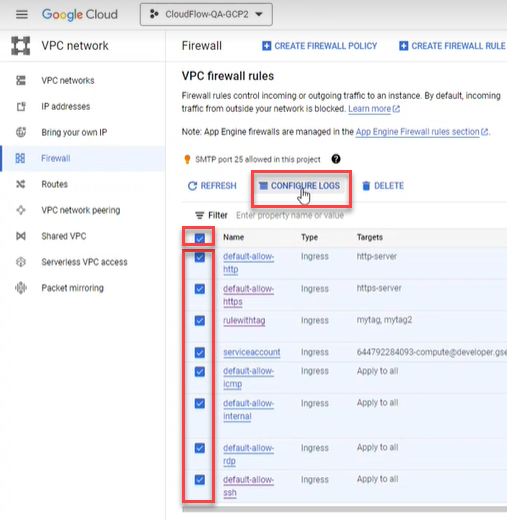

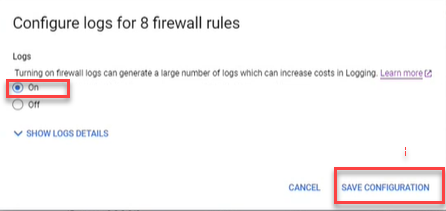

Enable the required APIs for your project:

-

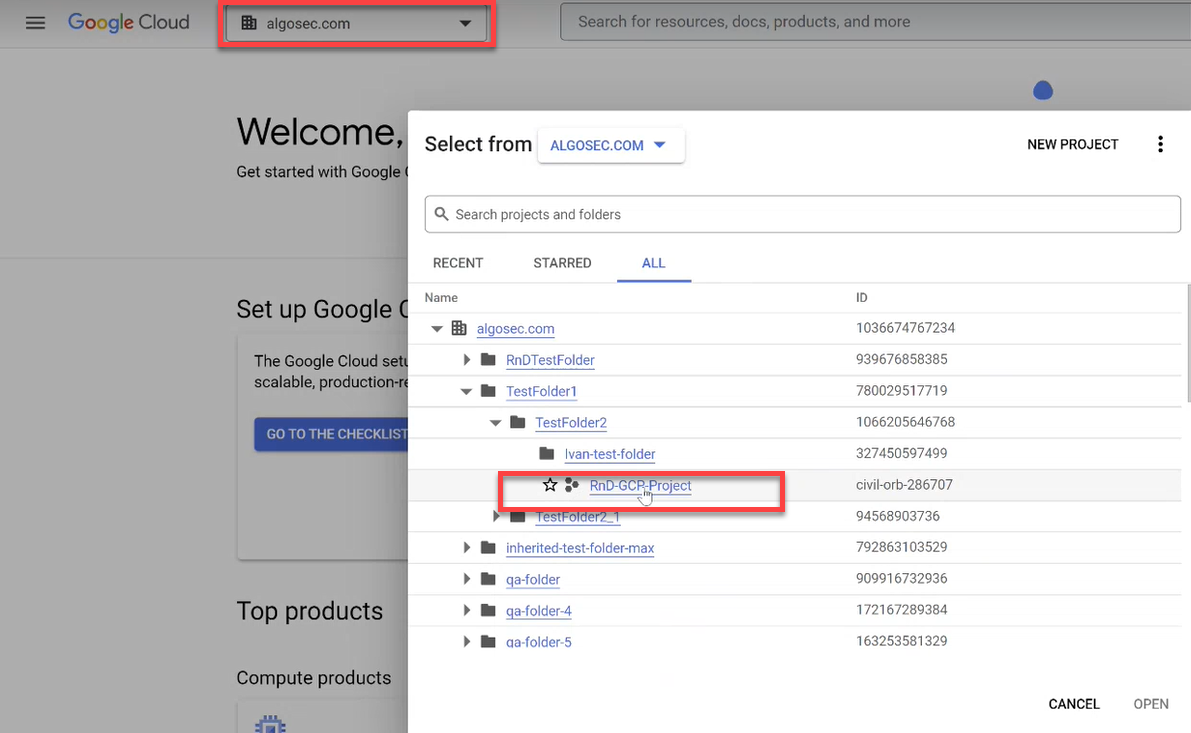

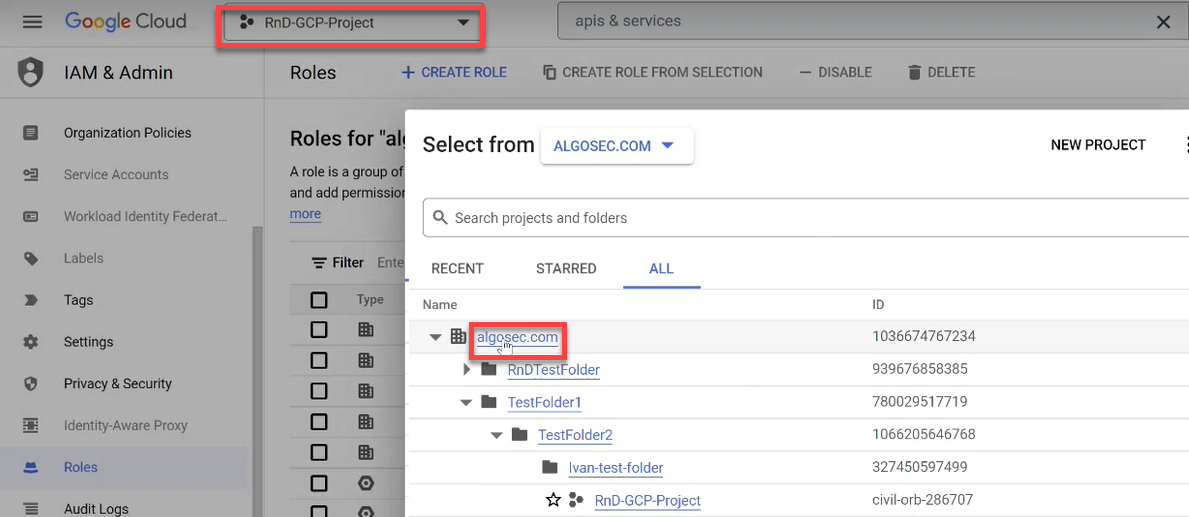

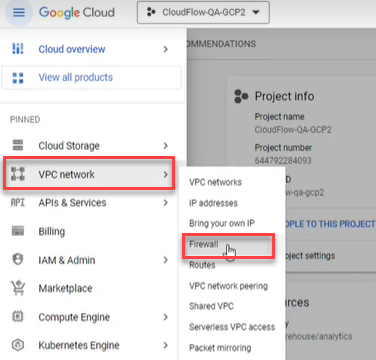

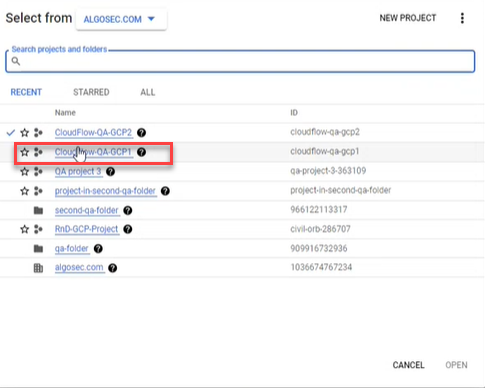

Click the project dropdown from the menu and choose your current project in the popup window that opens.

In this example, the project is called RnD-GCP-Project.

-

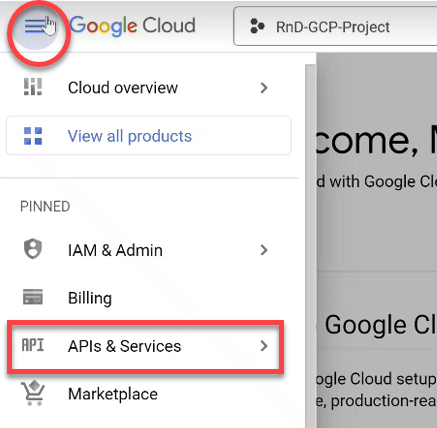

Click APIs & Services in the navigation menu.

The APIs & Services page opens.

-

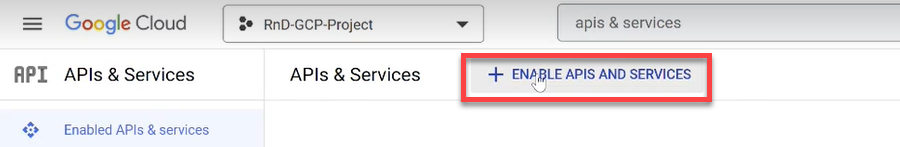

Click +ENABLE APIS & SERVICES at the top of the screen.

The Welcome to the API Library screen opens.

-

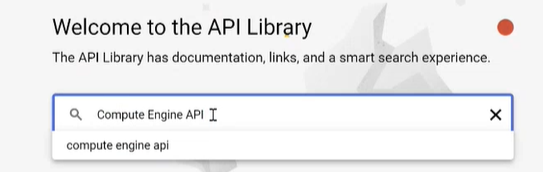

Search for Compute Engine API and make sure it is enabled.

-

Repeat the previous step for

-

Identity and Access Management (IAM) API

-

Cloud Storage API

-

Cloud Resource Manager API

-

Cloud Logging API

Note: For a details about required permissions see Permissions required for Google Cloud

-

-
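The console steps for enabling APIs can also be approximated from Cloud Shell with `gcloud services enable`. This is a sketch rather than part of the documented procedure: the service names are assumed to be the identifiers behind the five API titles listed above, `my-project-id` is a placeholder, and the function only prints the command unless you set `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: enable the five required APIs in one gcloud call.
# Assumption: these service names correspond to the API titles in the console.
REQUIRED_APIS=(
  compute.googleapis.com               # Compute Engine API
  iam.googleapis.com                   # Identity and Access Management (IAM) API
  storage.googleapis.com               # Cloud Storage API
  cloudresourcemanager.googleapis.com  # Cloud Resource Manager API
  logging.googleapis.com               # Cloud Logging API
)

enable_required_apis() {
  local project=$1
  local cmd=(gcloud services enable "${REQUIRED_APIS[@]}" "--project=$project")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "${cmd[*]}"   # print only; nothing is changed
  else
    "${cmd[@]}"        # actually enable the services
  fi
}

enable_required_apis "my-project-id"
```

Printing by default keeps the example safe to paste; verify the service names against the API Library before running it with `DRY_RUN=0`.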

-

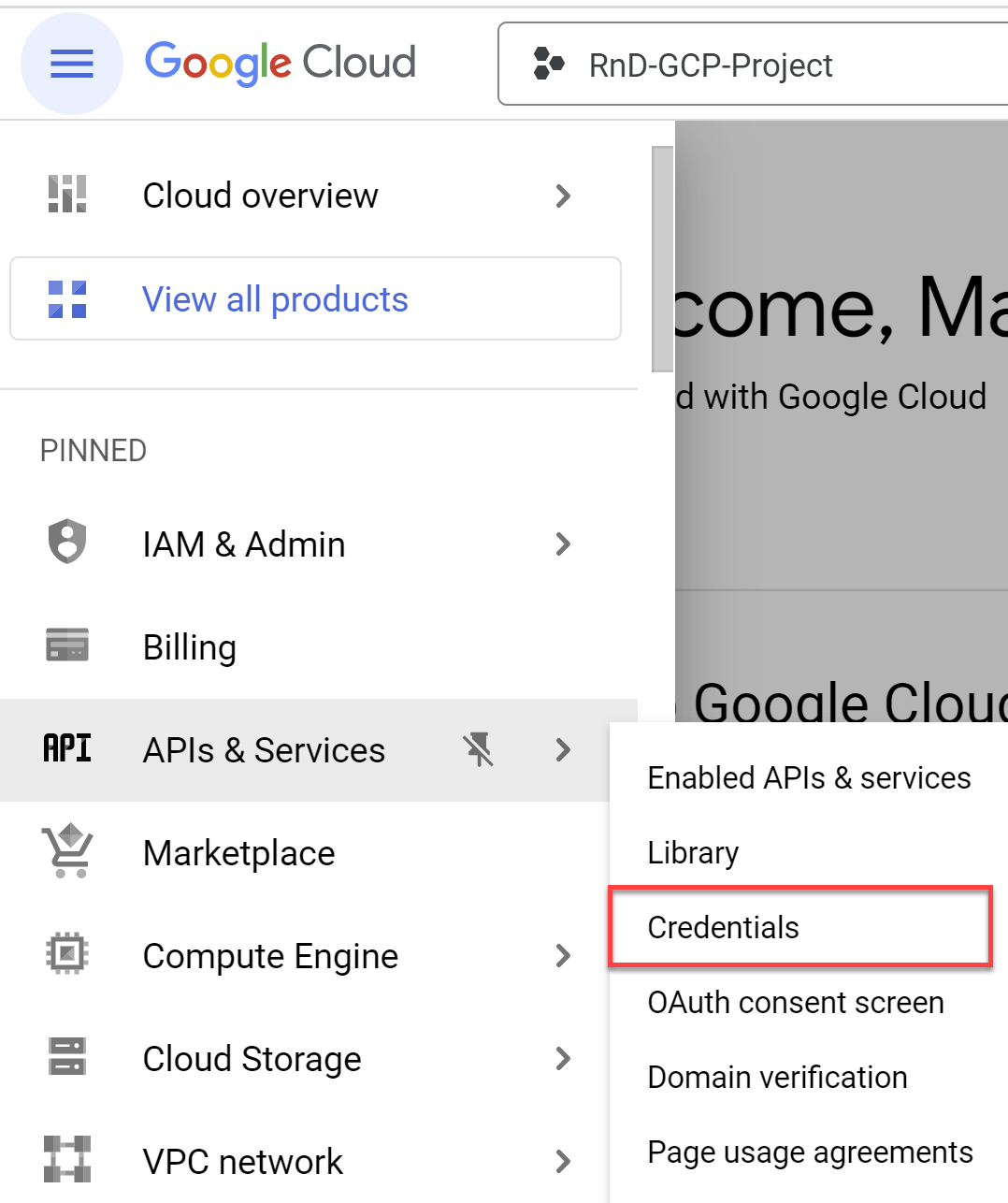

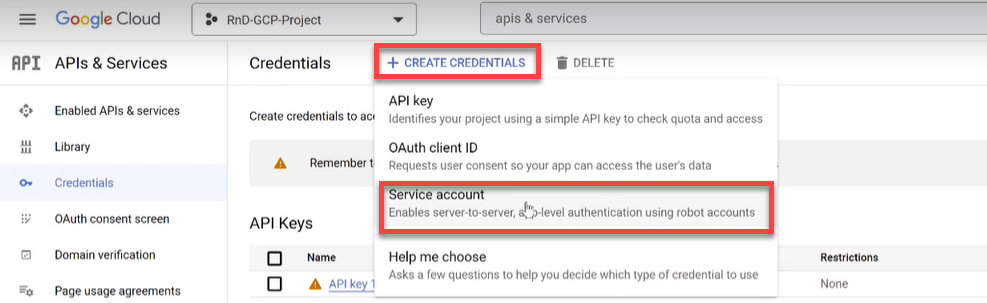

Add a Service Account:

-

Select APIs & Services > Credentials in the navigation menu.

The Credentials screen opens.

-

Click on +CREATE CREDENTIALS and select Service account from the dropdown list.

-

In the Service account name field enter "ACE-account" and click CREATE AND CONTINUE.

-

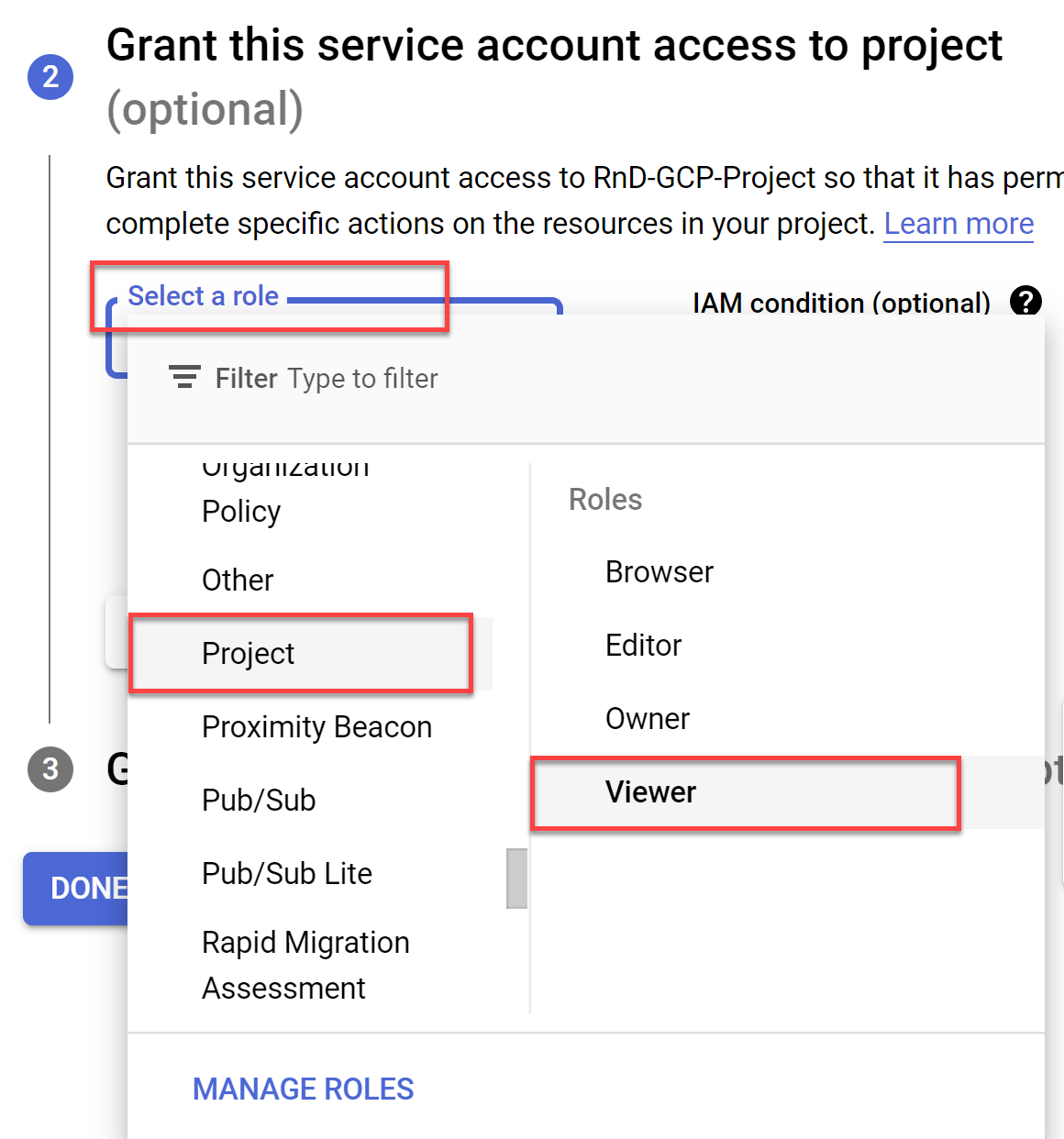

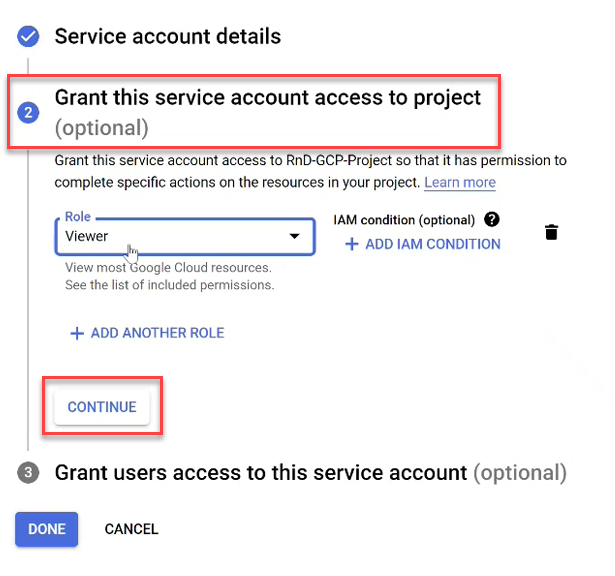

Click the Select a role dropdown and scroll to Project with the role Viewer.

Note: For the list of the Viewer role permissions see the Roles ID column in Permissions required for Google Cloud .

-

Click CONTINUE to save your changes. (Do not click DONE until you see a checkmark next to Grant this service account access to project).

-

Click DONE.

-

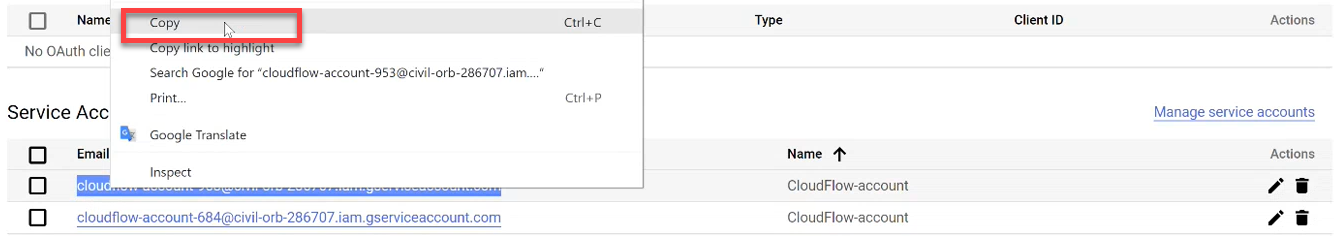

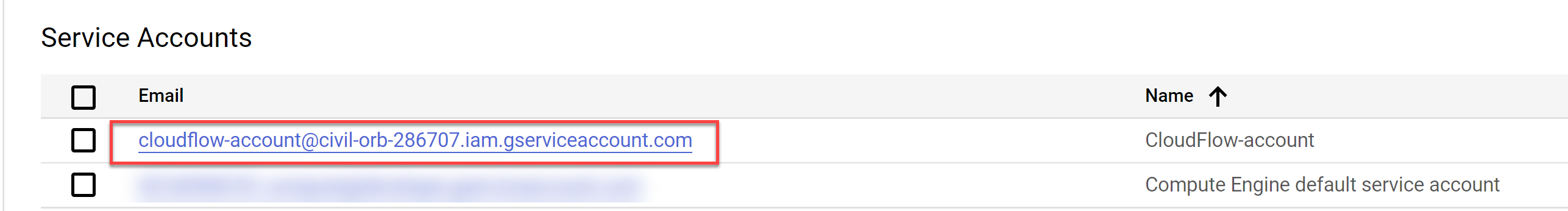

Copy the created Service Account email to use it later in Step 6b.

-
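For readers who prefer the command line, the service account creation above might look roughly like the following. Treat it as a sketch under stated assumptions: `ace-account` is an assumed account ID (service account IDs must be lowercase, while "ACE-account" is the display name used in this topic), `my-project-id` is a placeholder, and the helper names are hypothetical. The commands are printed, not executed.

```shell
#!/usr/bin/env bash
# Sketch: print gcloud commands that create the service account
# and grant it the project-level Viewer role.

sa_email() {
  # Same pattern the onboarding script uses for SERVICE_ACCOUNT_EMAIL.
  echo "$1@$2.iam.gserviceaccount.com"
}

print_sa_commands() {
  local project=$1 sa_id=${2:-ace-account}
  local email
  email=$(sa_email "$sa_id" "$project")
  echo "gcloud iam service-accounts create $sa_id --display-name=ACE-account --project=$project"
  echo "gcloud projects add-iam-policy-binding $project --member=serviceAccount:$email --role=roles/viewer"
}

print_sa_commands "my-project-id"
```

The email address produced by `sa_email` is the value you would paste into the New principals field when assigning roles.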

-

Export the Service Account credentials as a JSON file:

-

On the Credentials page, click on the Service Account email link for the ACE account.

The ACE account page opens.

-

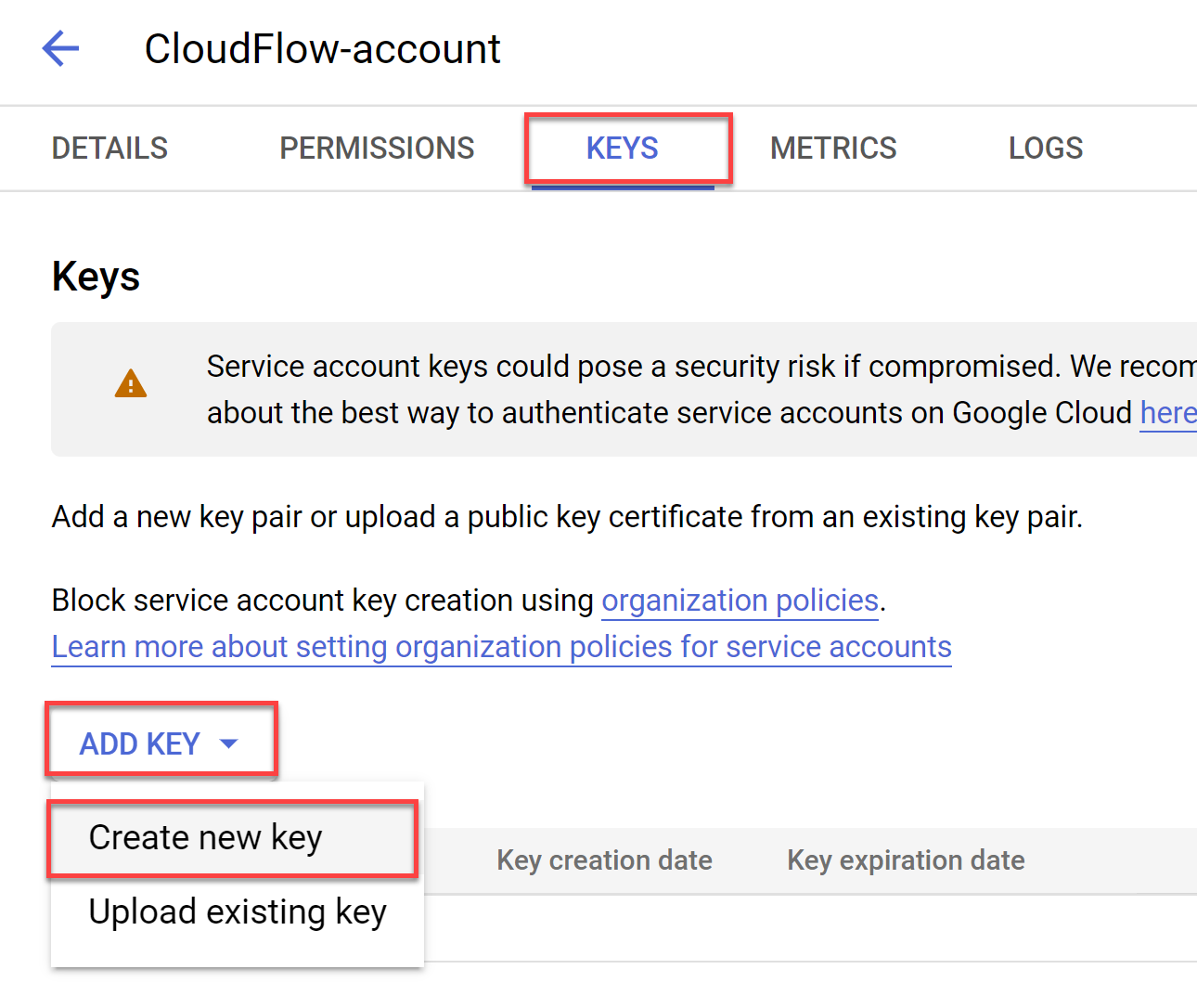

Select the KEYS tab. Click ADD KEY, and choose Create new key.

The Create Private Key for ACE-account dialog appears.

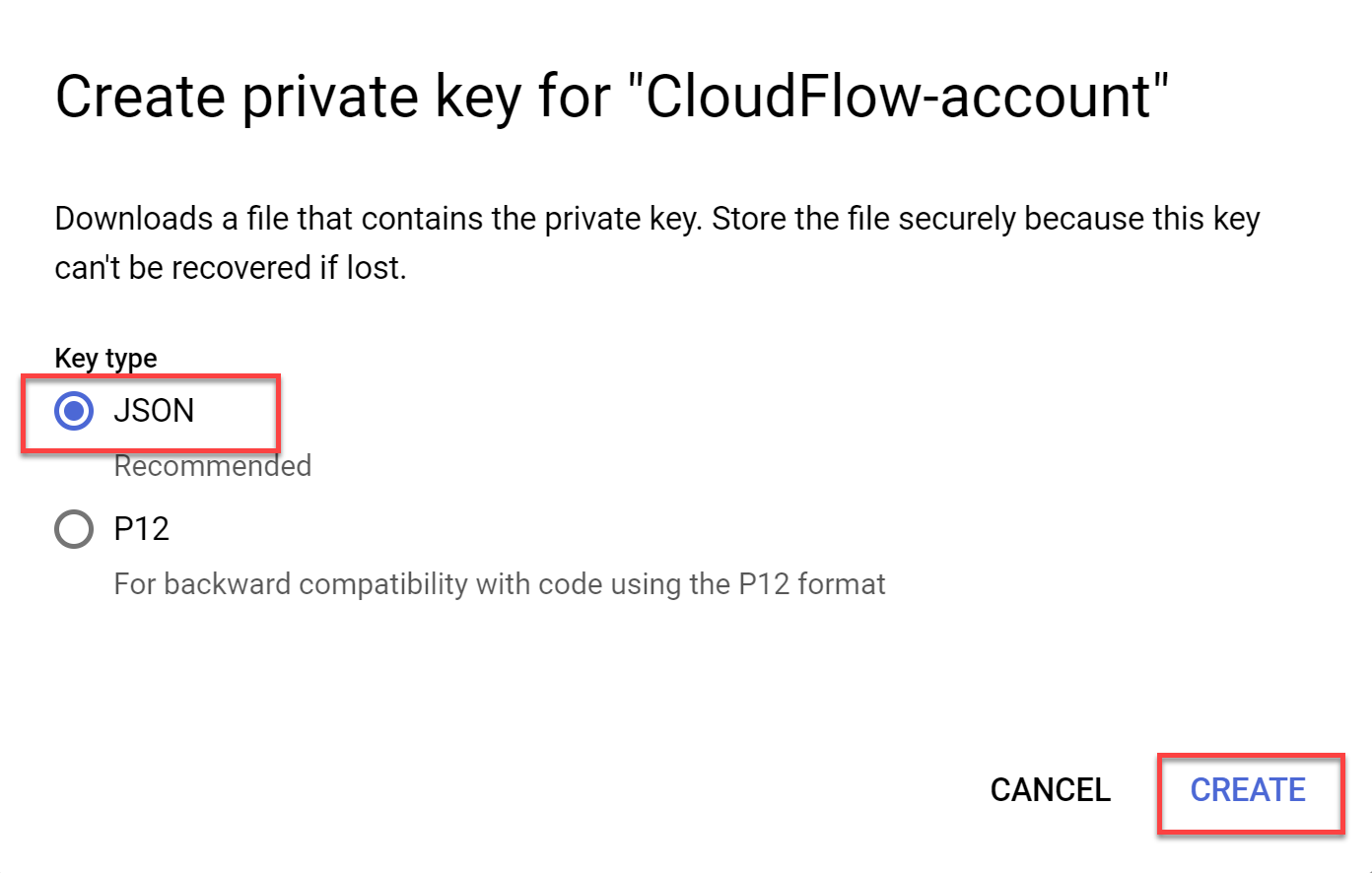

-

Select JSON for Key type and then click CREATE.

The JSON file is downloaded to your computer. You will use this file in Step 8.

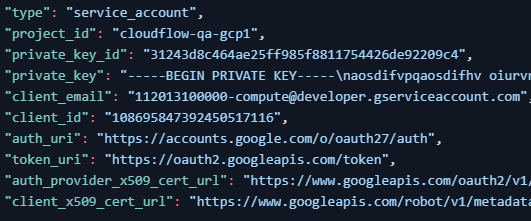

This is an example of the Service Account credentials in a JSON file:

Note: Your browser may block downloading the file. See the URL box for notifications.

-
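The onboarding script shown earlier in this topic creates the key with `gcloud iam service-accounts keys create` and then base64-encodes the file onto a single line before sending it to ACE. The sketch below reproduces only the encoding step on a stand-in file. Whether the API used in the no-script flow expects the same encoding is an assumption to confirm on the API page; `encode_key_file` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: base64-encode a key file onto one line.

encode_key_file() {
  # base64 may wrap long output; strip newlines to get a single line.
  base64 < "$1" | tr -d '\n'
}

# A real key would come from a command such as (not run here):
#   gcloud iam service-accounts keys create key.json --iam-account=<SERVICE_ACCOUNT_EMAIL>

# Demonstration with a stand-in file instead of a real key.
tmp=$(mktemp)
printf '{"type":"service_account"}' > "$tmp"
encode_key_file "$tmp"; echo
rm -f "$tmp"
```

Treat the key file and its encoded form as secrets, and delete local copies once onboarding is complete.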

-

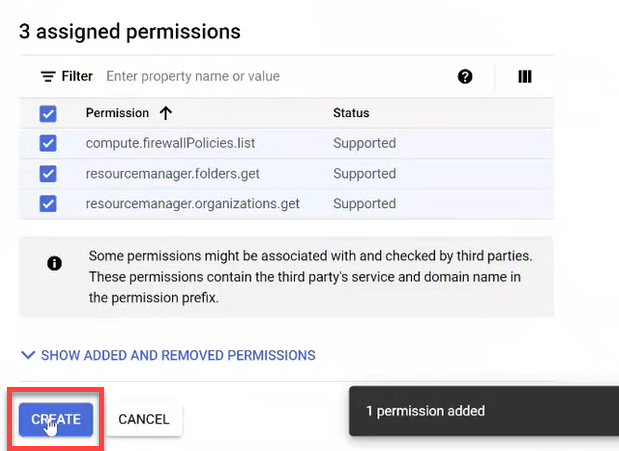

Create custom role with required permissions for your organization. See List of roles added to the selected target

-

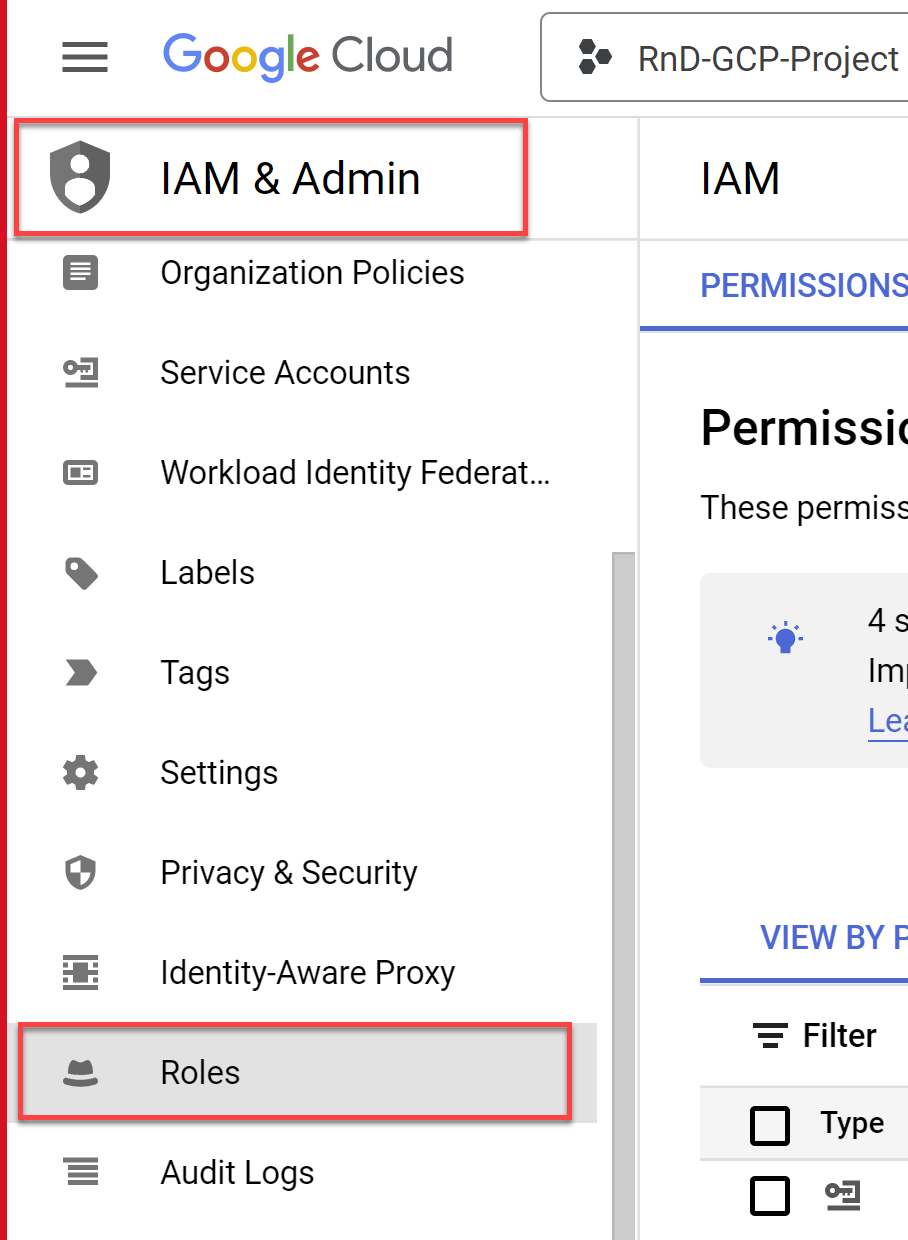

Select Roles located under IAM & Admin in the navigation menu.

-

Click the project dropdown from the menu and choose your organization in the popup window that opens.

-

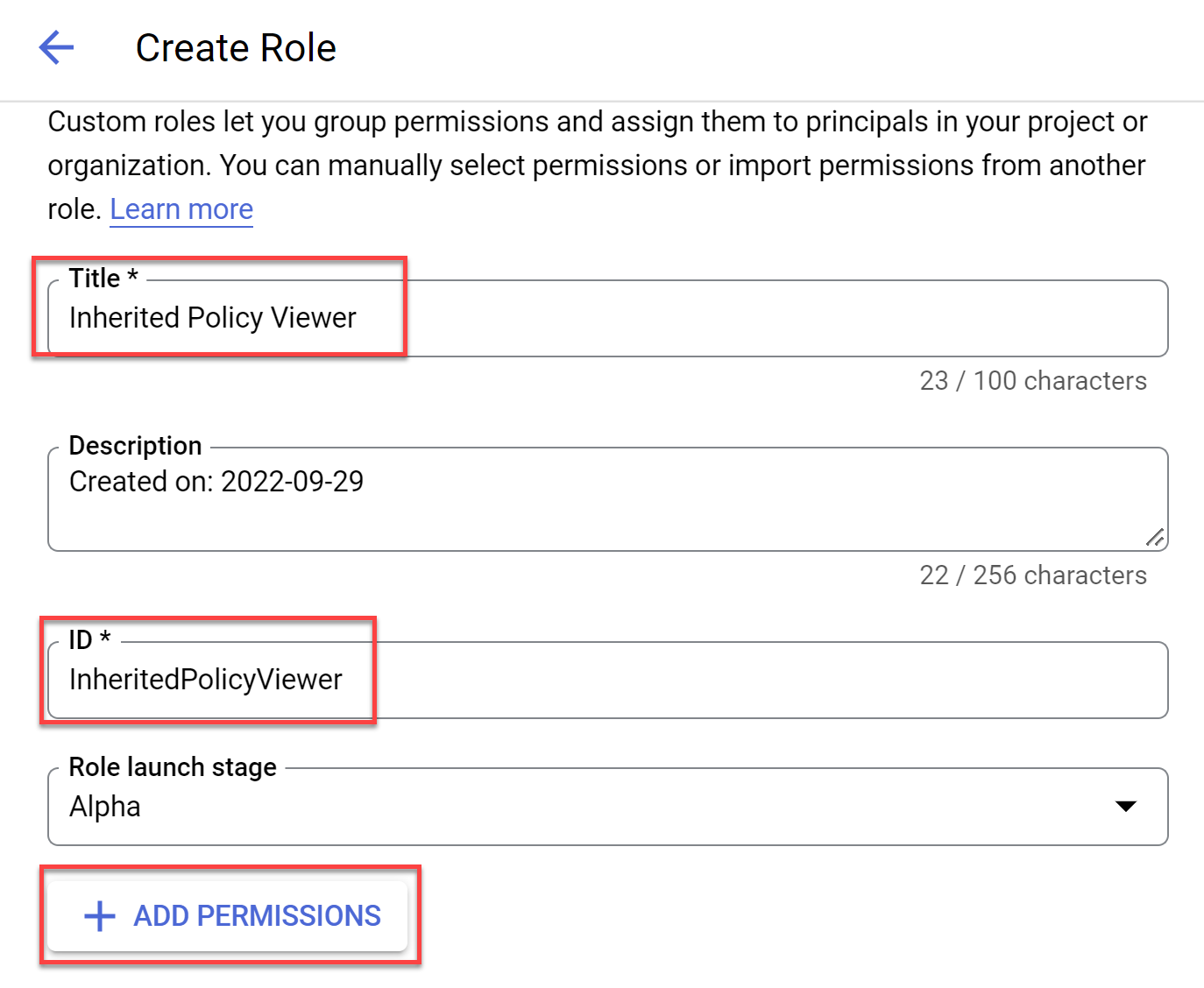

Click +CREATE ROLE. The Create Role screen opens.

-

Enter the role Title and ID and then click +ADD PERMISSIONS.

In the example below, Title is "Inherited Policy Viewer" and ID is "InheritedPolicyViewer".

The Add permissions window opens.

-

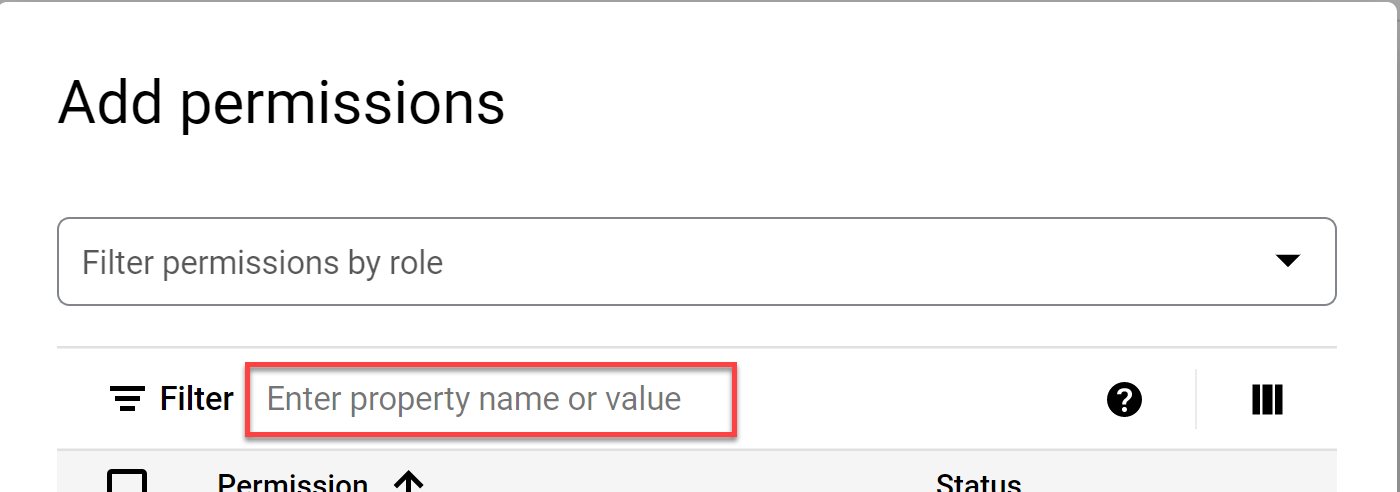

In the Enter property name or value field enter compute.firewallPolicies.list.

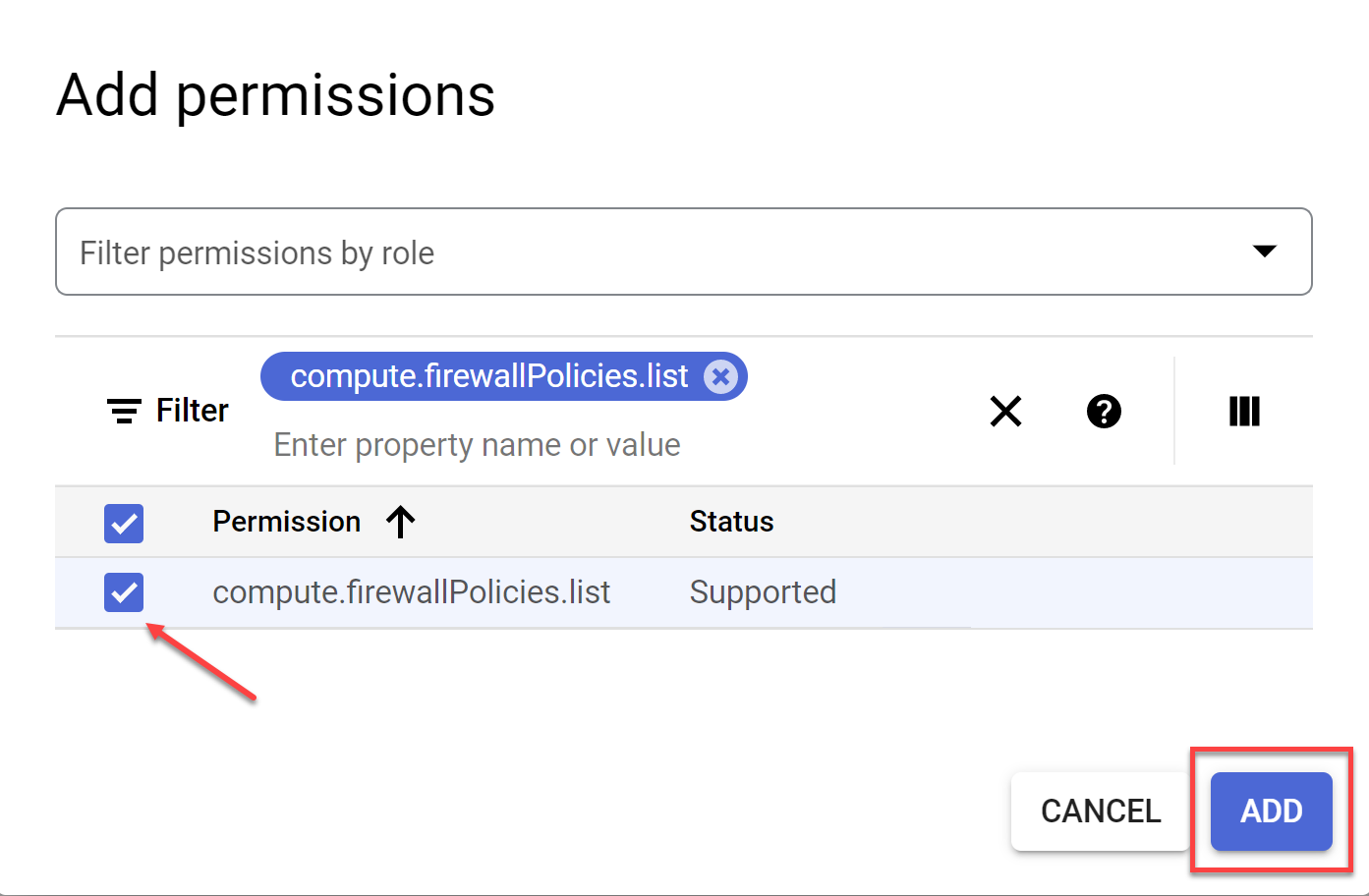

-

Choose the matching string from the list, click the checkbox to activate it and press ADD.

-

Repeat steps d through f for

-

resourcemanager.folders.get

-

resourcemanager.organizations.get

-

storage.buckets.list

Note: For details about the required permissions, see Permissions required for Google Cloud.

-

-

Click CREATE to complete the role creation process.
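The same custom role can probably also be created from Cloud Shell instead of the console. The following bash sketch assumes the example role ID and title used above and a placeholder organization ID; it prints the gcloud command by default and runs it only when DRY_RUN=0 (DRY_RUN is a convention of this sketch, not a gcloud feature).

```shell
ORG_ID="${ORG_ID:-123456789012}"     # placeholder organization ID
ROLE_ID="InheritedPolicyViewer"      # example ID from the steps above
ROLE_TITLE="Inherited Policy Viewer" # example title from the steps above

# The four permissions listed in the steps above.
PERMISSIONS=(
  compute.firewallPolicies.list
  resourcemanager.folders.get
  resourcemanager.organizations.get
  storage.buckets.list
)
# Join the array with commas, as --permissions expects.
PERMS_CSV=$(IFS=,; echo "${PERMISSIONS[*]}")

CMD=(gcloud iam roles create "$ROLE_ID"
  --organization="$ORG_ID"
  --title="$ROLE_TITLE"
  --permissions="$PERMS_CSV")

# Dry run by default: print the command; set DRY_RUN=0 to execute it.
if [ "${DRY_RUN:-1}" = "1" ]; then
  printf '%q ' "${CMD[@]}"; echo
else
  "${CMD[@]}"
fi
```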

-

-

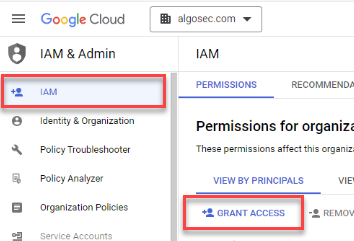

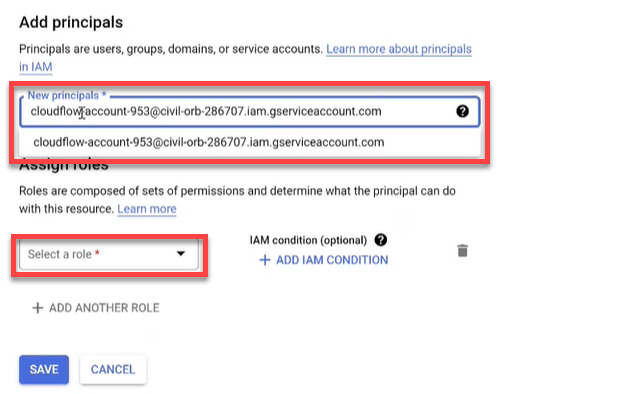

Assign the required role to the Service Account:

-

Select IAM located under IAM & Admin in the navigation menu and then click GRANT ACCESS or ADD.

The Add principals screen opens.

-

Paste the Service Account email you copied from Step 3g into the New principals field and then choose it from the dropdown.

-

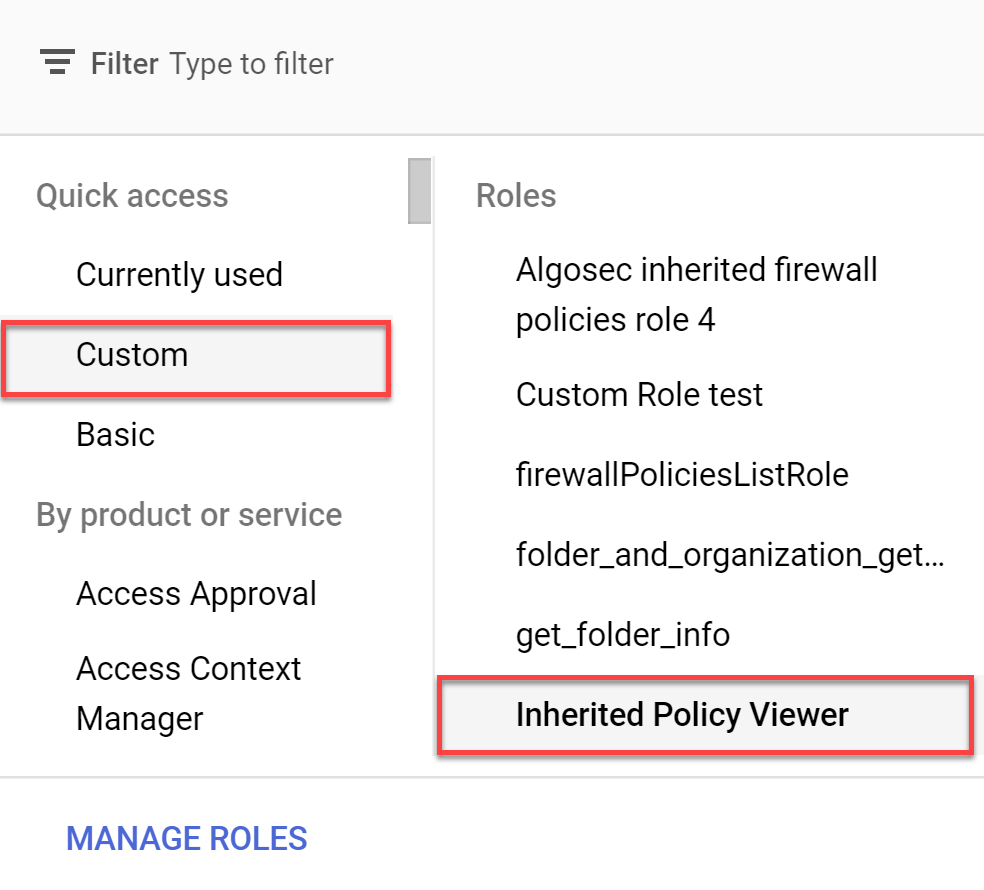

Click Select a role and choose Custom from the Quick access.

-

In the Roles list on the right side of the dialog window, click on the inherited role name you created in Step 5d.

-

Click SAVE. The policy is updated.

-

-

Open the Onboard Google Cloud Resources API page.

-

Enter your values into the request:

-

In the request body, provide the Organization ID (organization_id) and the Service Account Key (data).

-

The Service Account Key is contained in the JSON file downloaded earlier (see Step 4c).

-

-

Run the API call to complete the onboarding process.

-

Manually onboard CD mitigation (Optional):


-

Prerequisites

Before deploying the solution in Google Cloud Platform, ensure the following prerequisites are completed.

Do the following:

-

Open the Google Cloud Console.

-

Sign in with your Google account.

-

In the top navigation bar, select your GCP project.

-

Make note of your Project ID and Project Number.

-

In the top-right corner of the console, click Activate Cloud Shell.

-

A terminal window will open at the bottom of the screen, already authenticated.

-

Confirm your active project:

gcloud config list project

-

-

Set the GCP Project and Prepare Required Values

Ensure the correct project is selected in your Cloud Shell session and gather all required values before deploying.

Do the following:

-

If needed, set the correct project:

gcloud config set project <your-project-id>

-

Have the following required values ready:

-

To generate the ADDITIONALS value:

Create the following JSON:

{"tenantId":"ALGOSEC_TENANT_ID","clientId":"ALGOSEC_CLIENT_ID","clientSecret":"ALGOSEC_CLIENT_SECRET"}

Convert the JSON to a plain string.

Convert the string to UTF-8 bytes (most text editors or tools do this automatically).

Base64 encode the UTF-8 byte string using a tool or terminal command.

Example (bash):

tenantId="your-tenant-id"
clientId="your-client-id"
clientSecret="your-client-secret"
json=$(printf '{"tenantId":"%s","clientId":"%s","clientSecret":"%s"}' "$tenantId" "$clientId" "$clientSecret")
encoded=$(echo -n "$json" | base64)
echo "$encoded"
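GNU coreutils base64 wraps its output at 76 characters by default, so a long ADDITIONALS value may end up split across several lines. The following bash sketch, which uses the same placeholder values, strips any line wrapping and then decodes the result to confirm that it round-trips to the original JSON. Whether ACE accepts a wrapped value is not stated here, so treat the single-line form as a precaution.

```shell
tenantId="your-tenant-id"
clientId="your-client-id"
clientSecret="your-client-secret"

json=$(printf '{"tenantId":"%s","clientId":"%s","clientSecret":"%s"}' \
  "$tenantId" "$clientId" "$clientSecret")

# Strip any line wrapping so the value stays on a single line.
encoded=$(printf '%s' "$json" | base64 | tr -d '\n')

# Decode again and compare with the original JSON.
decoded=$(printf '%s' "$encoded" | base64 --decode)
if [ "$decoded" = "$json" ]; then
  echo "round trip OK"
else
  echo "round trip FAILED" >&2
fi
```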

Export the following variables:

export PROJECT_ID=<your-gcp-project-id>
export HASH=<your-unique-id>
export PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")
export SOURCES_URL="<GENERAL_ENV_URL>/prevasio/gcp-application"
export ALGOSEC_TENANT_ID="<Your Tenant ID>"
export SERVICE_ACCOUNT_EMAIL=<Your SERVICE_ACCOUNT_EMAIL>
export SERVICE_ACCOUNT_NAME=<Your SERVICE_ACCOUNT_NAME>
export ADDITIONALS=<ADDITIONALS value>
export ORG_ID=<GCP ORG ID>
export ALGOSEC_CLOUD_HOST=<GENERAL_ENV_URL>
export PREVASIO_HOST=<GENERAL_ENV_URL>
export NOTE_ID="prevasio-$HASH-note"
export ATTESTOR_ID="prevasio-$HASH-attestor"
export BINAUTHZ_SA="service-${PROJECT_NUMBER}@gcp-sa-binaryauthorization.iam.gserviceaccount.com"
export REGION=<your region, for example us-east1>
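Because later commands depend on every one of these variables, a quick check before continuing may save a failed deployment. The following bash sketch uses a hypothetical helper, find_missing_vars, that reports variables that are unset, empty, or still hold an angle-bracket placeholder.

```shell
# Variable names taken from the export list above.
REQUIRED_VARS=(PROJECT_ID HASH PROJECT_NUMBER SOURCES_URL ALGOSEC_TENANT_ID
  SERVICE_ACCOUNT_EMAIL SERVICE_ACCOUNT_NAME ADDITIONALS ORG_ID
  ALGOSEC_CLOUD_HOST PREVASIO_HOST REGION)

# Prints the names of variables that are unset, empty, or still "<...>".
find_missing_vars() {
  local name value
  for name in "$@"; do
    value="${!name:-}"          # indirect expansion: value of the named variable
    case "$value" in
      ""|"<"*">") echo "$name" ;;
    esac
  done
}

missing=$(find_missing_vars "${REQUIRED_VARS[@]}")
if [ -n "$missing" ]; then
  echo "Set these variables before continuing:"
  echo "$missing"
else
  echo "All required variables are set."
fi
```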

-

-

Create Binary Authorization Attestor

This step configures a Binary Authorization attestor for trusted image signing, using a KMS signing key and GCP IAM roles.

Do the following:

-

Set the required environment variables:

export NOTE_ID="prevasio-$HASH-note"
export ATTESTOR_ID="prevasio-$HASH-attestor"
export BINAUTHZ_SA="service-${PROJECT_NUMBER}@gcp-sa-binaryauthorization.iam.gserviceaccount.com"

-

Create a Container Analysis note:

cat <<EOF > attestor_note.json
{
  "attestation": {
    "hint": {
      "human_readable_name": "Prevasio attestation authority"
    }
  }
}
EOF
curl -s -X POST -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "Content-Type: application/json" \
  --data-binary @attestor_note.json \
  "https://containeranalysis.googleapis.com/v1/projects/${PROJECT_ID}/notes/?noteId=${NOTE_ID}"
rm attestor_note.json

-

Create the attestor using the note you just defined:

gcloud container binauthz attestors create $ATTESTOR_ID \
  --attestation-authority-note=$NOTE_ID \
  --attestation-authority-note-project=$PROJECT_ID \
  --project=$PROJECT_ID

-

Grant IAM access for the attestor to view occurrences:

cat <<EOF > iam_request.json
{
  "resource": "projects/${PROJECT_ID}/notes/${NOTE_ID}",
  "policy": {
    "bindings": [
      {
        "role": "roles/containeranalysis.notes.occurrences.viewer",
        "members": [
          "serviceAccount:${BINAUTHZ_SA}"
        ]
      }
    ]
  }
}
EOF
curl -s -X POST -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "Content-Type: application/json" \
  --data-binary @iam_request.json \
  "https://containeranalysis.googleapis.com/v1/projects/${PROJECT_ID}/notes/${NOTE_ID}:setIamPolicy"
rm iam_request.json

-

Create a KMS keyring and signing key (idempotent):

gcloud kms keyrings create prevasio-attestor-keyring \
  --location=global --project=$PROJECT_ID --quiet || true
gcloud kms keys create prevasio-attestor-key \
  --keyring=prevasio-attestor-keyring \
  --location=global \
  --purpose=asymmetric-signing \
  --default-algorithm=ec-sign-p256-sha256 \
  --project=$PROJECT_ID --quiet || true

-

Grant the Compute Engine default service account permission to sign with the key:

gcloud kms keys add-iam-policy-binding prevasio-attestor-key \
  --keyring=prevasio-attestor-keyring \
  --location=global \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/cloudkms.signer" \
  --project=$PROJECT_ID

-

Attach the KMS public key to the Binary Authorization attestor:

gcloud beta container binauthz attestors public-keys add \
  --attestor=$ATTESTOR_ID \
  --keyversion-project=$PROJECT_ID \
  --keyversion-location=global \
  --keyversion-keyring=prevasio-attestor-keyring \
  --keyversion-key=prevasio-attestor-key \
  --keyversion=1 \
  --project=$PROJECT_ID

-

-

Update Binary Authorization Policy

-

Export the current policy:

gcloud container binauthz policy export --project=$PROJECT_ID --quiet --verbosity=error > ./policy.yaml

-

Extract the current attestors:

ATTESTORS=($(awk '/-/{if ($1=="requireAttestationsBy:") print $NF}' ./policy.yaml))

-

Add your new attestor:

ATTESTORS+=("projects/$PROJECT_ID/attestors/$ATTESTOR_ID")

-

Create a new policy file with the updated attestors:

echo -e "defaultAdmissionRule:\n enforcementMode: ENFORCED_BLOCK_AND_AUDIT_LOG\n evaluationMode: REQUIRE_ATTESTATION\n requireAttestationsBy:" > ./updated_policy.yaml
for attestor in "${ATTESTORS[@]}"; do echo " - $attestor"; done >> ./updated_policy.yaml
echo "globalPolicyEvaluationMode: ENABLE" >> ./updated_policy.yaml

-

Import the updated policy:

gcloud container binauthz policy import ./updated_policy.yaml --project=$PROJECT_ID --quiet --verbosity=error

-

Clean up temporary files:

rm -f ./policy.yaml ./updated_policy.yaml
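If the exported policy lists attestors as YAML list items on the lines below requireAttestationsBy:, an extraction that tracks that list may be more dependable for preserving existing attestors. The following bash sketch runs against a small sample file that stands in for the exported policy; the helper name list_attestors and the sample project and attestor names are illustrative only. It reads just the first requireAttestationsBy list it finds, so policies with cluster-specific rules would need extra handling.

```shell
# Prints each list item under the first "requireAttestationsBy:" key.
list_attestors() {
  awk '
    /^[[:space:]]*requireAttestationsBy:/ { in_list = 1; next }
    in_list && /^[[:space:]]*-[[:space:]]/ { print $2; next }
    in_list { exit }
  ' "$1"
}

# Sample policy, standing in for the output of "policy export".
sample=$(mktemp)
cat > "$sample" <<'EOF'
defaultAdmissionRule:
  enforcementMode: ENFORCED_BLOCK_AND_AUDIT_LOG
  evaluationMode: REQUIRE_ATTESTATION
  requireAttestationsBy:
  - projects/demo-project/attestors/existing-attestor
globalPolicyEvaluationMode: ENABLE
EOF

# Existing attestors first, then the new one.
ATTESTORS=($(list_attestors "$sample"))
ATTESTORS+=("projects/demo-project/attestors/prevasio-demo-attestor")
printf '%s\n' "${ATTESTORS[@]}"
rm -f "$sample"
```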

-

-

Prepare Working Directory and Download Resources

Set up a clean local directory for the deployment and download required application resources.

Do the following:

-

Create and enter a clean working directory:

mkdir -p prevasio-onboarding && rm -rf prevasio-onboarding/*
cd prevasio-onboarding

This will:

- Create a folder named prevasio-onboarding

- Delete any existing content

- Change into that directory

-

Download application resources based on your region <SOURCE_URL>:

- US: https://us.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- EU: https://eu.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- ANZ: https://anz.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- IND: https://ind.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- ME: https://me.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- UAE: https://uae.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- SGP: https://sgp.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

Or use the following Bash script:

wget -O sources.zip "${SOURCES_URL}?tenant_id=${ALGOSEC_TENANT_ID}"
unzip sources.zip

For <SOURCE_URL> see HERE.
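To avoid typing the regional URL by hand, the base URL can be derived from the region code. The following bash sketch uses the region codes and host names from the list above; the helper name sources_url_for_region and the ACE_REGION variable are hypothetical.

```shell
# Maps a region code from the list above to its download base URL.
sources_url_for_region() {
  case "$1" in
    US|EU|ANZ|IND|ME|UAE|SGP)
      # Host names in the list follow the pattern <region>.app.algosec.com.
      local sub
      sub=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
      echo "https://${sub}.app.algosec.com/prevasio/gcp-application"
      ;;
    *)
      echo "unknown region code: $1" >&2
      return 1
      ;;
  esac
}

ACE_REGION="${ACE_REGION:-US}"   # hypothetical variable name
SOURCES_URL=$(sources_url_for_region "$ACE_REGION") || exit 1
echo "SOURCES_URL=$SOURCES_URL"
```

The resulting SOURCES_URL can then be used with the wget command shown above.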

-

Enable the required GCP APIs for each project you plan to onboard:

gcloud services enable \
  artifactregistry.googleapis.com \
  cloudfunctions.googleapis.com \
  cloudkms.googleapis.com \
  cloudscheduler.googleapis.com \
  compute.googleapis.com \
  container.googleapis.com \
  pubsub.googleapis.com \
  secretmanager.googleapis.com \
  binaryauthorization.googleapis.com \
  cloudbuild.googleapis.com \
  containeranalysis.googleapis.com \
  run.googleapis.com
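When several projects are being onboarded, the same list of APIs can be enabled in a loop. The following bash sketch assumes placeholder project IDs and a hypothetical run wrapper that only prints each command unless DRY_RUN=0 is set.

```shell
PROJECTS=("project-a" "project-b")   # placeholder project IDs

# APIs from the command above.
APIS=(
  artifactregistry.googleapis.com cloudfunctions.googleapis.com
  cloudkms.googleapis.com cloudscheduler.googleapis.com
  compute.googleapis.com container.googleapis.com
  pubsub.googleapis.com secretmanager.googleapis.com
  binaryauthorization.googleapis.com cloudbuild.googleapis.com
  containeranalysis.googleapis.com run.googleapis.com
)

# Prints the command unless DRY_RUN=0, in which case it is executed.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi
}

for project in "${PROJECTS[@]}"; do
  run gcloud services enable "${APIS[@]}" --project="$project"
done
```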

-

-

Get Project Number and Configure Secrets

Retrieve your GCP project number and create secrets to securely store configuration values, granting access to the default compute service account.

Do the following:

-

Set your project ID and retrieve your project number:

PROJECT_ID=<your-project-id>
PROJECT_NUMBER=$(gcloud projects describe "$PROJECT_ID" --format="value(projectNumber)")

-

Create a secret to store your organization ID:

echo "$ORG_ID" | gcloud secrets create prevasio-$HASH-org-id --data-file=- --project=$PROJECT_ID

-

Grant the compute engine service account access to the organization ID secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-org-id \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"

-

Create a secret to store the Prevasio host URL:

echo "$PREVASIO_HOST" | gcloud secrets create prevasio-$HASH-host --data-file=- --project=$PROJECT_ID

-

Grant the compute engine service account access to the Prevasio host secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-host \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"

-

-

Create Additional Secrets and Grant Access

Store additional configuration parameters and the AlgoSec Cloud host value as GCP secrets, and assign access to the Compute Engine default service account.

Do the following:

-

Create a secret to store additional configuration parameters (Base64-encoded):

echo "$ADDITIONALS" | gcloud secrets create prevasio-$HASH-additionals --data-file=- --project=$PROJECT_ID

-

Grant access to the Compute Engine service account for the additionals secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-additionals \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"

-

Create a secret to securely store the AlgoSec Cloud host value:

echo "$ALGOSEC_CLOUD_HOST" | gcloud secrets create prevasio-$HASH-algosec-cloud-host --data-file=- --project=$PROJECT_ID

-

Grant the Compute Engine service account permission to access the AlgoSec Cloud host secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-algosec-cloud-host \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"
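The four secrets follow the same create-then-grant pattern, so they could be handled by one helper. The following bash sketch is illustrative only: create_secret_with_access and DRY_RUN are hypothetical names, the default values are placeholders, and the member string assumes the Compute Engine default service account. It uses printf '%s' instead of echo so that no trailing newline is stored in the secret; whether the deployed functions tolerate a trailing newline is not stated in this guide.

```shell
# Hypothetical helper: create one secret and grant the Compute Engine
# default service account read access to it.
create_secret_with_access() {
  local name="$1" value="$2"
  local member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    # Dry run: show the commands without calling gcloud or printing the value.
    echo "+ gcloud secrets create $name --data-file=- --project=$PROJECT_ID"
    echo "+ gcloud secrets add-iam-policy-binding $name --member=$member --role=roles/secretmanager.secretAccessor --project=$PROJECT_ID"
    return 0
  fi
  # printf '%s' avoids the trailing newline that echo would add.
  printf '%s' "$value" | gcloud secrets create "$name" \
    --data-file=- --project="$PROJECT_ID"
  gcloud secrets add-iam-policy-binding "$name" \
    --member="$member" \
    --role="roles/secretmanager.secretAccessor" \
    --project="$PROJECT_ID"
}

PROJECT_ID="${PROJECT_ID:-demo-project}"          # placeholder
PROJECT_NUMBER="${PROJECT_NUMBER:-123456789012}"  # placeholder
HASH="${HASH:-demo}"                              # placeholder

create_secret_with_access "prevasio-$HASH-org-id" "${ORG_ID:-000000000000}"
create_secret_with_access "prevasio-$HASH-host" "${PREVASIO_HOST:-https://example.invalid}"
```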

-

-

Deploy Cloud Functions

Deploy the required Cloud Functions using the Google Cloud CLI. These functions handle event forwarding and container scanning operations.

Do the following:

-

Deploy the Events Forwarder Function:

gcloud functions deploy prevasio-$HASH-events-forwarder \
  --gen2 \
  --set-env-vars=HASH=$HASH \
  --set-secrets=PREVASIO_HOST=prevasio-$HASH-host:1,PREVASIO_ADDITIONALS=prevasio-$HASH-additionals:1,ORGANIZATION_ID=prevasio-$HASH-org-id:1,ALGOSEC_CLOUD_HOST=prevasio-$HASH-algosec-cloud-host:1 \
  --region=$REGION \
  --runtime=python310 \
  --source=./function/events_forwarder \
  --entry-point=forward_func \
  --trigger-http \
  --no-allow-unauthenticated \
  --max-instances=1 \
  --memory=128Mi \
  --project=$PROJECT_ID

-

Deploy the Cloud Run Scanner Function:

gcloud functions deploy prevasio-$HASH-cloud-run-scanner \
  --gen2 \
  --region=$REGION \
  --runtime=python310 \
  --source=./function/cloud_run_scanner \
  --entry-point=scan_func \
  --trigger-http \
  --no-allow-unauthenticated \
  --max-instances=1 \
  --memory=256Mi \
  --project=$PROJECT_ID

-

-

Create Cloud Scheduler Job and Trigger Scanner

Configure a recurring scan using Cloud Scheduler, and manually invoke the scanner once to verify it is operational.

Do the following:

-

Create a Cloud Scheduler job to invoke the scanner every 6 hours:

gcloud scheduler jobs create http prevasio-$HASH-cloud-run-scanner-scheduler \
  --schedule="0 */6 * * *" \
  --uri="https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-cloud-run-scanner" \
  --location=$REGION \
  --oidc-service-account-email=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com

-

Trigger the scanner function once manually to verify it's working:

curl -X POST -H "Authorization: Bearer $(gcloud auth print-identity-token)" \
  "https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-cloud-run-scanner"

-

-

Create Pub/Sub Topic and Deploy Attestation Function

Create a Pub/Sub topic to manage image signing events and deploy a function to handle attestation creation for scanned images.

Do the following:

-

Create a Pub/Sub topic for images that need attestation:

gcloud pubsub topics create prevasio-$HASH-images-to-sign --project=$PROJECT_ID

-

Grant publishing rights to the Compute Engine service account:

gcloud pubsub topics add-iam-policy-binding prevasio-$HASH-images-to-sign \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/pubsub.publisher"

-

Deploy the image attestation creator Cloud Function:

gcloud functions deploy prevasio-$HASH-image-attestation-creator \
  --gen2 \
  --set-env-vars=HASH=$HASH \
  --region=$REGION \
  --runtime=python310 \
  --source=./function/image_attestation_creator \
  --entry-point=creator_func \
  --trigger-http \
  --no-allow-unauthenticated \
  --max-instances=10 \
  --memory=128Mi \
  --project=$PROJECT_ID

-

Create a Pub/Sub subscription that triggers the attestation function:

gcloud pubsub subscriptions create prevasio-$HASH-image-attestation-creator-subscription \
  --topic=projects/$PROJECT_ID/topics/prevasio-$HASH-images-to-sign \
  --expiration-period=never \
  --push-endpoint=https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-image-attestation-creator \
  --push-auth-service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \
  --ack-deadline=65 \
  --message-retention-duration=10m \
  --project=$PROJECT_ID

-

-

Create and Run the Image Publishing Script

Use this script to scan your Artifact Registry for container images and publish them to the Pub/Sub topic for signing.

Do the following:

-

Run the following from Cloud Shell or your terminal:

PROJECT_ID=$PROJECT_ID
HASH=$HASH
# The loop variable is named LOCATION so that the exported REGION value,
# which later steps still use, is not overwritten.
for LOCATION in $(gcloud compute regions list --format="value(name)" --project=$PROJECT_ID); do
  for REPO in $(gcloud artifacts repositories list \
      --location=$LOCATION \
      --project=$PROJECT_ID \
      --format="value(REPOSITORY)" 2>/dev/null); do
    IMAGE_PATH="${LOCATION}-docker.pkg.dev/${PROJECT_ID}/${REPO}"
    for IMAGE in $(gcloud artifacts docker images list $IMAGE_PATH \
        --project=$PROJECT_ID \
        --format="value[separator='@'](IMAGE,DIGEST)" 2>/dev/null); do
      echo "Publishing image: $IMAGE"
      gcloud pubsub topics publish prevasio-$HASH-images-to-sign \
        --message="$IMAGE" \
        --project=$PROJECT_ID > /dev/null
    done
  done
done
echo "Done publishing image events."

-

-

Create GCR Topic and Event Subscription

Ensure a GCR Pub/Sub topic exists for container image activity, and subscribe to it with the event forwarder Cloud Function.

Do the following:

-

Check if a GCR topic exists; if not, create it:

gcloud pubsub topics list --project=$PROJECT_ID --format="value(name)" | grep "/gcr" || \
  gcloud pubsub topics create gcr --project=$PROJECT_ID

-

Create a subscription that pushes GCR event messages to the events forwarder function:

gcloud pubsub subscriptions create prevasio-$HASH-event-subscription \
  --topic=projects/$PROJECT_ID/topics/gcr \
  --expiration-period=never \
  --push-endpoint=https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-events-forwarder \
  --push-auth-service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \
  --ack-deadline=65 \
  --message-retention-duration=10m \
  --project=$PROJECT_ID

-

-

Pub/Sub Subscription Check (optional)

Note: This step is optional, but it’s helpful for verifying that the subscription is configured correctly. If the values are incorrect, you may not receive notifications as expected.

-

Describe a Pub/Sub Subscription

gcloud pubsub subscriptions describe prevasio-$HASH-event-subscription --project=$PROJECT_ID

This command retrieves and displays the details of the Pub/Sub subscription named prevasio-$HASH-event-subscription in the specified Google Cloud project. It is useful for verifying the configuration, status, and properties of the subscription, such as its topic, push/pull settings, and message retention policies.

-

Update a Pub/Sub Subscription to Use a Push Endpoint

gcloud pubsub subscriptions update prevasio-$HASH-event-subscription \
  --push-endpoint=https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-events-forwarder \
  --push-auth-service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \
  --project=$PROJECT_ID

This command updates the Pub/Sub subscription named prevasio-$HASH-event-subscription in your specified Google Cloud project. It sets the subscription to push messages to a specific Cloud Function endpoint (--push-endpoint) and uses a designated service account (--push-auth-service-account) for authentication. This configuration enables secure, automated delivery of Pub/Sub messages to your Cloud Function for processing.

Note: If you accidentally provide incorrect values for the push endpoint or service account, you can simply rerun this command with the correct values to update the subscription settings.
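The check can also be scripted by comparing the configured push endpoint with the expected URL. The following bash sketch assumes placeholder defaults and a hypothetical helper, expected_endpoint. It reads the pushConfig.pushEndpoint field from the describe output and falls back to an empty value if gcloud is unavailable or the call fails.

```shell
# Builds the push endpoint URL in the form used when the subscription was created.
expected_endpoint() {
  local region="$1" project="$2" hash="$3"
  echo "https://${region}-${project}.cloudfunctions.net/prevasio-${hash}-events-forwarder"
}

REGION="${REGION:-us-east1}"              # placeholder defaults
PROJECT_ID="${PROJECT_ID:-demo-project}"
HASH="${HASH:-demo}"

expected=$(expected_endpoint "$REGION" "$PROJECT_ID" "$HASH")

# Read the configured endpoint; stays empty if gcloud is missing or fails.
actual=""
if command -v gcloud >/dev/null 2>&1; then
  actual=$(gcloud pubsub subscriptions describe "prevasio-${HASH}-event-subscription" \
    --project="$PROJECT_ID" \
    --format="value(pushConfig.pushEndpoint)" 2>/dev/null || true)
fi

if [ "$actual" = "$expected" ]; then
  echo "Push endpoint matches: $actual"
else
  echo "Expected: $expected"
  echo "Found:    ${actual:-<none>}"
fi
```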

-

-

Authenticate and Set GCP Service Account

Ensure the correct service account is authenticated and set as the active identity in your GCP environment before running further commands.

Do the following:

-

Login:

gcloud auth login

-

Create a key file for your service account:

gcloud iam service-accounts keys create $SERVICE_ACCOUNT_NAME.json --iam-account=$SERVICE_ACCOUNT_EMAIL --project=$PROJECT_ID

-

Activate the service account using its key file:

gcloud auth activate-service-account $SERVICE_ACCOUNT_EMAIL --key-file=$SERVICE_ACCOUNT_NAME.json

-

-

You can use API calls to add a single Google Cloud project to ACE.

Note: Any changes to a project after onboarding are not synced with ACE. To delete one or more manually added projects, see Offboard Google Cloud projects from ACE.

Do the following:

-

Log in to the Google Cloud console (https://console.cloud.google.com/) as a user with the following permissions:

- Organization Role Administrator or IAM Role Administrator

-

Organization Policy Administrator

-

Enable the required APIs for your project:

-

Click the project dropdown from the menu and choose your current project in the popup window that opens.

In this example, the project is called RnD-GCP-Project.

-

Click APIs & Services in the navigation menu.

The APIs & Services page opens.

-

Click +ENABLE APIS & SERVICES at the top of the screen.

The Welcome to the API Library screen opens.

-

Search for Compute Engine API and make sure it is enabled.

-

Repeat the previous step for each of the following APIs:

-

Identity and Access Management (IAM) API

-

Cloud Storage API

-

Cloud Resource Manager API

-

Cloud Logging API

Note: For details about the required permissions, see Permissions required for Google Cloud.

-

-

-

Add a Service Account:

-

Select APIs & Services > Credentials in the navigation menu.

The Credentials screen opens.

-

Click on +CREATE CREDENTIALS and select Service account from the dropdown list.

-

In the Service account name field enter "ACE-account" and click CREATE AND CONTINUE.

-

Click the Select a role dropdown and scroll to Project with the role Viewer.

Note: For the list of the Viewer role permissions, see the Roles ID column in Permissions required for Google Cloud.

-

Click CONTINUE to save your changes. (Do not click DONE until you see a checkmark next to Grant this service account access to project).

-

Click DONE.

-

Copy the created Service Account email to use it later in Step 6b.

-

-

Export the Service Account credentials as a JSON file:

-

On the Credentials page, click on the Service Account email link for the ACE account.

The ACE account page opens.

-

Select the KEYS tab. Click ADD KEY, and choose Create new key.

The Create Private Key for ACE-account dialog appears.

-

Select JSON for Key type and then click CREATE.

The JSON file is downloaded to your computer. You will use this file in Step 8.

This is an example of the Service Account credentials in a JSON file:

Note: Your browser may block downloading the file. See the URL box for notifications.

-

-

Create a custom role with the required permissions for your organization. See List of roles added to the selected target.

-

Select Roles located under IAM & Admin in the navigation menu.

-

Click the project dropdown from the menu and choose your organization in the popup window that opens.

-

Click +CREATE ROLE. The Create Role screen opens.

-

Enter the role Title and ID and then click +ADD PERMISSIONS.

In the example below, Title is "Inherited Policy Viewer" and ID is "InheritedPolicyViewer".

The Add permissions window opens.

-

In the Enter property name or value field enter compute.firewallPolicies.list.

-

Choose the matching string from the list, click the checkbox to activate it and press ADD.

-

Repeat steps d through f for each of the following permissions:

-

resourcemanager.folders.get

-

resourcemanager.organizations.get

-

storage.buckets.list

Note: For details about the required permissions, see Permissions required for Google Cloud.

-

-

Click CREATE to complete the role creation process.

-

-

Assign the required role to the Service Account:

-

Select IAM located under IAM & Admin in the navigation menu and then click GRANT ACCESS or ADD.

The Add principals screen opens.

-

Paste the Service Account email you copied from Step 3g into the New principals field and then choose it from the dropdown.

-

Click Select a role and choose Custom from the Quick access.

-

In the Roles list on the right side of the dialog window, click on the inherited role name you created in Step 5d.

-

Click SAVE. The policy is updated.

-

-

Manually onboard CD mitigation (Optional):


-

Prerequisites

Before deploying the solution in Google Cloud Platform, ensure the following prerequisites are completed.

Do the following:

-

Open the Google Cloud Console.

-

Sign in with your Google account.

-

In the top navigation bar, select your GCP project.

-

Make note of your Project ID and Project Number.

-

In the top-right corner of the console, click Activate Cloud Shell.

-

A terminal window will open at the bottom of the screen, already authenticated.

-

Confirm your active project:

gcloud config list project

-

-

Set the GCP Project and Prepare Required Values

Ensure the correct project is selected in your Cloud Shell session and gather all required values before deploying.

Do the following:

-

If needed, set the correct project:

gcloud config set project <your-project-id>

-

Have the following required values ready:

-

To generate the ADDITIONALS value:

Create the following JSON:

{"tenantId":"ALGOSEC_TENANT_ID","clientId":"ALGOSEC_CLIENT_ID","clientSecret":"ALGOSEC_CLIENT_SECRET"}

Convert the JSON to a plain string.

Convert the string to UTF-8 bytes (most text editors or tools do this automatically).

Base64 encode the UTF-8 byte string using a tool or terminal command.

Example (bash):

tenantId="your-tenant-id"
clientId="your-client-id"
clientSecret="your-client-secret"
json=$(printf '{"tenantId":"%s","clientId":"%s","clientSecret":"%s"}' "$tenantId" "$clientId" "$clientSecret")
encoded=$(echo -n "$json" | base64)
echo "$encoded"

-

Export the following variables:

export PROJECT_ID=<your-gcp-project-id>
export HASH=<your-unique-id>
export PROJECT_NUMBER=$(gcloud projects describe $PROJECT_ID --format="value(projectNumber)")
export SOURCES_URL="<GENERAL_ENV_URL>/prevasio/gcp-application"
export ALGOSEC_TENANT_ID="<Your Tenant ID>"
export SERVICE_ACCOUNT_EMAIL=<Your SERVICE_ACCOUNT_EMAIL>
export SERVICE_ACCOUNT_NAME=<Your SERVICE_ACCOUNT_NAME>
export ADDITIONALS=<ADDITIONALS value>
export ORG_ID=<GCP ORG ID>
export ALGOSEC_CLOUD_HOST=<GENERAL_ENV_URL>
export PREVASIO_HOST=<GENERAL_ENV_URL>
export NOTE_ID="prevasio-$HASH-note"
export ATTESTOR_ID="prevasio-$HASH-attestor"
export BINAUTHZ_SA="service-${PROJECT_NUMBER}@gcp-sa-binaryauthorization.iam.gserviceaccount.com"
export REGION=<your region, for example us-east1>

-

-

Create Binary Authorization Attestor

This step configures a Binary Authorization attestor for trusted image signing, using a KMS signing key and GCP IAM roles.

Do the following:

-

Set the required environment variables:

export NOTE_ID="prevasio-$HASH-note"
export ATTESTOR_ID="prevasio-$HASH-attestor"
export BINAUTHZ_SA="service-${PROJECT_NUMBER}@gcp-sa-binaryauthorization.iam.gserviceaccount.com"

-

Create a Container Analysis note:

cat <<EOF > attestor_note.json
{
  "attestation": {
    "hint": {
      "human_readable_name": "Prevasio attestation authority"
    }
  }
}
EOF
curl -s -X POST -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "Content-Type: application/json" \
  --data-binary @attestor_note.json \
  "https://containeranalysis.googleapis.com/v1/projects/${PROJECT_ID}/notes/?noteId=${NOTE_ID}"
rm attestor_note.json

-

Create the attestor using the note you just defined:

gcloud container binauthz attestors create $ATTESTOR_ID \
  --attestation-authority-note=$NOTE_ID \
  --attestation-authority-note-project=$PROJECT_ID \
  --project=$PROJECT_ID

-

Grant IAM access for the attestor to view occurrences:

cat <<EOF > iam_request.json
{
  "resource": "projects/${PROJECT_ID}/notes/${NOTE_ID}",
  "policy": {
    "bindings": [
      {
        "role": "roles/containeranalysis.notes.occurrences.viewer",
        "members": [
          "serviceAccount:${BINAUTHZ_SA}"
        ]
      }
    ]
  }
}
EOF
curl -s -X POST -H "Authorization: Bearer $(gcloud auth print-access-token)" \
  -H "Content-Type: application/json" \
  --data-binary @iam_request.json \
  "https://containeranalysis.googleapis.com/v1/projects/${PROJECT_ID}/notes/${NOTE_ID}:setIamPolicy"
rm iam_request.json

-

Create a KMS keyring and signing key (idempotent):

gcloud kms keyrings create prevasio-attestor-keyring \
  --location=global --project=$PROJECT_ID --quiet || true
gcloud kms keys create prevasio-attestor-key \
  --keyring=prevasio-attestor-keyring \
  --location=global \
  --purpose=asymmetric-signing \
  --default-algorithm=ec-sign-p256-sha256 \
  --project=$PROJECT_ID --quiet || true

-

Grant the Compute Engine default service account permission to sign with the key:

gcloud kms keys add-iam-policy-binding prevasio-attestor-key \
  --keyring=prevasio-attestor-keyring \
  --location=global \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/cloudkms.signer" \
  --project=$PROJECT_ID

-

Attach the KMS public key to the Binary Authorization attestor:

gcloud beta container binauthz attestors public-keys add \
  --attestor=$ATTESTOR_ID \
  --keyversion-project=$PROJECT_ID \
  --keyversion-location=global \
  --keyversion-keyring=prevasio-attestor-keyring \
  --keyversion-key=prevasio-attestor-key \
  --keyversion=1 \
  --project=$PROJECT_ID

-

-

Update Binary Authorization Policy

-

Export the current policy:

gcloud container binauthz policy export --project=$PROJECT_ID --quiet --verbosity=error > ./policy.yaml

-

Extract the current attestors:

ATTESTORS=($(awk '/-/{if ($1=="requireAttestationsBy:") print $NF}' ./policy.yaml))

-

Add your new attestor:

ATTESTORS+=("projects/$PROJECT_ID/attestors/$ATTESTOR_ID")

-

Create a new policy file with the updated attestors:

echo -e "defaultAdmissionRule:\n enforcementMode: ENFORCED_BLOCK_AND_AUDIT_LOG\n evaluationMode: REQUIRE_ATTESTATION\n requireAttestationsBy:" > ./updated_policy.yaml
for attestor in "${ATTESTORS[@]}"; do echo " - $attestor"; done >> ./updated_policy.yaml
echo "globalPolicyEvaluationMode: ENABLE" >> ./updated_policy.yaml

-

Import the updated policy:

gcloud container binauthz policy import ./updated_policy.yaml --project=$PROJECT_ID --quiet --verbosity=error

-

Clean up temporary files:

rm -f ./policy.yaml ./updated_policy.yaml

-

-

Prepare Working Directory and Download Resources

Set up a clean local directory for the deployment and download required application resources.

Do the following:

-

Create and enter a clean working directory:

mkdir -p prevasio-onboarding && rm -rf prevasio-onboarding/*
cd prevasio-onboarding

This will:

- Create a folder named prevasio-onboarding

- Delete any existing content

- Change into that directory

-

Download application resources based on your region <SOURCE_URL>:

- US: https://us.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- EU: https://eu.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- ANZ: https://anz.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- IND: https://ind.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- ME: https://me.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- UAE: https://uae.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

- SGP: https://sgp.app.algosec.com/prevasio/gcp-application?tenant_id=${ALGOSEC_TENANT_ID}

Or use the following Bash script:

wget -O sources.zip "${SOURCES_URL}?tenant_id=${ALGOSEC_TENANT_ID}"
unzip sources.zip

For <SOURCE_URL> see HERE.

-

Enable the required GCP APIs for each project you plan to onboard:

gcloud services enable \
  artifactregistry.googleapis.com \
  cloudfunctions.googleapis.com \
  cloudkms.googleapis.com \
  cloudscheduler.googleapis.com \
  compute.googleapis.com \
  container.googleapis.com \
  pubsub.googleapis.com \
  secretmanager.googleapis.com \
  binaryauthorization.googleapis.com \
  cloudbuild.googleapis.com \
  containeranalysis.googleapis.com \
  run.googleapis.com

-

-

Get Project Number and Configure Secrets

Retrieve your GCP project number and create secrets to securely store configuration values, granting access to the default compute service account.

Do the following:

-

Set your project ID and retrieve your project number:

PROJECT_ID=<your-project-id>
PROJECT_NUMBER=$(gcloud projects describe "$PROJECT_ID" --format="value(projectNumber)")

-

Create a secret to store your organization ID:

echo "$ORG_ID" | gcloud secrets create prevasio-$HASH-org-id --data-file=- --project=$PROJECT_ID

-

Grant the compute engine service account access to the organization ID secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-org-id \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"

-

Create a secret to store the Prevasio host URL:

echo "$PREVASIO_HOST" | gcloud secrets create prevasio-$HASH-host --data-file=- --project=$PROJECT_ID

-

Grant the compute engine service account access to the Prevasio host secret:

Copygcloud secrets add-iam-policy-binding prevasio-$HASH-host \

--member="serviceAccount:${PROJECT_NUMBER}[email protected]" \

--role="roles/secretmanager.secretAccessor"
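
The commands in this procedure rely on shell variables such as `$HASH`, `$ORG_ID`, and `$PREVASIO_HOST` that must be set beforehand. The following is a minimal pre-flight sketch that reports any that are empty or unset. The helper name `require_vars` is hypothetical, and the variable list in the usage comment is collected from the commands in this topic, not from an official checklist.

```shell
# Sketch: fail early when a variable used by later commands is empty or unset.
# Pass only trusted, literal variable names, because the lookup uses eval.
require_vars() {
  missing=0
  for name in "$@"; do
    # Indirect lookup: copy the value of the variable called $name.
    eval "value=\${${name}:-}"
    if [ -z "${value}" ]; then
      echo "missing variable: ${name}" >&2
      missing=1
    fi
  done
  return "${missing}"
}

# Usage:
# require_vars PROJECT_ID PROJECT_NUMBER HASH REGION \
#   ORG_ID PREVASIO_HOST ADDITIONALS ALGOSEC_CLOUD_HOST || exit 1
```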

-

-

Create Additional Secrets and Grant Access

Store additional configuration parameters and the AlgoSec Cloud host value as GCP secrets, and assign access to the Compute Engine default service account.

Do the following:

-

Create a secret to store additional configuration parameters (Base64-encoded):

echo "$ADDITIONALS" | gcloud secrets create prevasio-$HASH-additionals --data-file=- --project=$PROJECT_ID

-

Grant the Compute Engine default service account access to the additionals secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-additionals \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"

-

Create a secret to securely store the AlgoSec Cloud host value:

echo "$ALGOSEC_CLOUD_HOST" | gcloud secrets create prevasio-$HASH-algosec-cloud-host --data-file=- --project=$PROJECT_ID

-

Grant the Compute Engine default service account permission to access the AlgoSec Cloud host secret:

gcloud secrets add-iam-policy-binding prevasio-$HASH-algosec-cloud-host \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/secretmanager.secretAccessor"
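
The four secrets follow the same create-then-grant pattern, so the steps can be folded into one helper. This is a sketch under stated assumptions: the function name `create_secret_with_access` is hypothetical, the member string uses the standard default Compute Engine service account format (`PROJECT_NUMBER-compute@developer.gserviceaccount.com`), and `--project` is added to the binding command as a global gcloud flag.

```shell
# Sketch: create one secret and let the default compute service account read it.
# Assumes PROJECT_ID, PROJECT_NUMBER and HASH are already set.
create_secret_with_access() {
  # $1 = secret suffix (for example "org-id"), $2 = secret value
  secret_name="prevasio-${HASH}-$1"
  member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com"

  echo "$2" | gcloud secrets create "${secret_name}" \
    --data-file=- --project="${PROJECT_ID}" || return 1

  gcloud secrets add-iam-policy-binding "${secret_name}" \
    --member="${member}" \
    --role="roles/secretmanager.secretAccessor" \
    --project="${PROJECT_ID}"
}

# Usage:
# create_secret_with_access org-id "$ORG_ID"
# create_secret_with_access host "$PREVASIO_HOST"
# create_secret_with_access additionals "$ADDITIONALS"
# create_secret_with_access algosec-cloud-host "$ALGOSEC_CLOUD_HOST"
```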

-

-

Deploy Cloud Functions

Deploy the required Cloud Functions using the Google Cloud CLI. These functions handle event forwarding and container scanning operations.

Do the following:

-

Deploy the Events Forwarder Function:

gcloud functions deploy prevasio-$HASH-events-forwarder \
  --gen2 \
  --set-env-vars=HASH=$HASH \
  --set-secrets=PREVASIO_HOST=prevasio-$HASH-host:1,PREVASIO_ADDITIONALS=prevasio-$HASH-additionals:1,ORGANIZATION_ID=prevasio-$HASH-org-id:1,ALGOSEC_CLOUD_HOST=prevasio-$HASH-algosec-cloud-host:1 \
  --region=$REGION \
  --runtime=python310 \
  --source=./function/events_forwarder \
  --entry-point=forward_func \
  --trigger-http \
  --no-allow-unauthenticated \
  --max-instances=1 \
  --memory=128Mi \
  --project=$PROJECT_ID

-

Deploy the Cloud Run Scanner Function:

gcloud functions deploy prevasio-$HASH-cloud-run-scanner \
  --gen2 \
  --region=$REGION \
  --runtime=python310 \
  --source=./function/cloud_run_scanner \
  --entry-point=scan_func \
  --trigger-http \
  --no-allow-unauthenticated \
  --max-instances=1 \
  --memory=256Mi \
  --project=$PROJECT_ID
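
The `--set-secrets` flag of the events forwarder deployment pins each secret to version 1, so the deployment depends on those versions existing and being enabled. The following sketch checks that before (or after) deploying. The helper name `check_pinned_secrets` is hypothetical; it relies on `gcloud secrets versions describe` reporting a `state` of `ENABLED` for usable versions.

```shell
# Sketch: confirm that version 1 of each secret referenced by --set-secrets
# exists and is enabled. Assumes PROJECT_ID and HASH are already set.
check_pinned_secrets() {
  status=0
  for suffix in host additionals org-id algosec-cloud-host; do
    name="prevasio-${HASH}-${suffix}"
    state="$(gcloud secrets versions describe 1 --secret="${name}" \
      --project="${PROJECT_ID}" --format="value(state)" 2>/dev/null)"
    if [ "${state}" = "ENABLED" ]; then
      echo "ok: ${name} version 1 is enabled"
    else
      echo "missing or disabled: ${name} version 1"
      status=1
    fi
  done
  return "${status}"
}

# Usage:
# check_pinned_secrets || echo "Fix the secrets before deploying."
```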

-

-

Create Cloud Scheduler Job and Trigger Scanner

Configure a recurring scan using Cloud Scheduler, and manually invoke the scanner once to verify it is operational.

Do the following:

-

Create a Cloud Scheduler job to invoke the scanner every 6 hours:

gcloud scheduler jobs create http prevasio-$HASH-cloud-run-scanner-scheduler \
  --schedule="0 */6 * * *" \
  --uri="https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-cloud-run-scanner" \
  --location=$REGION \
  --oidc-service-account-email=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com

-

Trigger the scanner function once manually to verify it's working:

curl -X POST -H "Authorization: Bearer $(gcloud auth print-identity-token)" \
  "https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-cloud-run-scanner"

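The manual trigger only verifies anything if the HTTP status is inspected. The sketch below builds the function URL from the same pattern used in this procedure and reports the status code. The helper names `function_url` and `trigger_scanner` are hypothetical, and treating any 2xx status as success is an assumption about how the scanner function responds.

```shell
# Sketch: build a function URL and check the HTTP status of a manual trigger.
# Assumes REGION, PROJECT_ID and HASH are already set.
function_url() {
  # $1 = function suffix, for example "cloud-run-scanner"
  echo "https://${REGION}-${PROJECT_ID}.cloudfunctions.net/prevasio-${HASH}-$1"
}

trigger_scanner() {
  url="$(function_url cloud-run-scanner)"
  # -o /dev/null discards the body; -w prints only the status code.
  code="$(curl -s -o /dev/null -w '%{http_code}' -X POST \
    -H "Authorization: Bearer $(gcloud auth print-identity-token)" "${url}")"
  echo "HTTP ${code} from ${url}"
  # Assumption: any 2xx status means the scanner accepted the request.
  case "${code}" in
    2??) return 0 ;;
    *)   return 1 ;;
  esac
}

# Usage:
# trigger_scanner || echo "Scanner did not respond with a 2xx status."
```
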
-

-

Create Pub/Sub Topic and Deploy Attestation Function

Create a Pub/Sub topic to manage image signing events and deploy a function to handle attestation creation for scanned images.

Do the following:

-

Create a Pub/Sub topic for images that need attestation:

gcloud pubsub topics create prevasio-$HASH-images-to-sign --project=$PROJECT_ID

-

Grant publishing rights to the Compute Engine default service account:

gcloud pubsub topics add-iam-policy-binding prevasio-$HASH-images-to-sign \
  --member="serviceAccount:${PROJECT_NUMBER}-compute@developer.gserviceaccount.com" \
  --role="roles/pubsub.publisher"

-

Deploy the image attestation creator Cloud Function:

gcloud functions deploy prevasio-$HASH-image-attestation-creator \
  --gen2 \
  --set-env-vars=HASH=$HASH \
  --region=$REGION \
  --runtime=python310 \
  --source=./function/image_attestation_creator \
  --entry-point=creator_func \
  --trigger-http \
  --no-allow-unauthenticated \
  --max-instances=10 \
  --memory=128Mi \
  --project=$PROJECT_ID

-

Create a Pub/Sub subscription that triggers the attestation function:

gcloud pubsub subscriptions create prevasio-$HASH-image-attestation-creator-subscription \
  --topic=projects/$PROJECT_ID/topics/prevasio-$HASH-images-to-sign \
  --expiration-period=never \
  --push-endpoint=https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-image-attestation-creator \
  --push-auth-service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \
  --ack-deadline=65 \
  --message-retention-duration=10m \
  --project=$PROJECT_ID

-

-

Create and Run the Image Publishing Script

Use this script to scan your Artifact Registry for container images and publish them to the Pub/Sub topic for signing.

Do the following:

-

Run the following from Cloud Shell or your terminal:

# PROJECT_ID and HASH must already be set in this shell.
# The loop variable is named REPO_REGION so that it does not overwrite
# the $REGION value used by the other steps.
for REPO_REGION in $(gcloud compute regions list --format="value(name)" --project=$PROJECT_ID); do
  for REPO in $(gcloud artifacts repositories list \
      --location=$REPO_REGION \
      --project=$PROJECT_ID \
      --format="value(REPOSITORY)" 2>/dev/null); do
    IMAGE_PATH="${REPO_REGION}-docker.pkg.dev/${PROJECT_ID}/${REPO}"
    for IMAGE in $(gcloud artifacts docker images list $IMAGE_PATH \
        --project=$PROJECT_ID \
        --format="value[separator='@'](IMAGE,DIGEST)" 2>/dev/null); do
      # Each message is a single image reference in the form IMAGE@DIGEST.
      echo "Publishing image: $IMAGE"
      gcloud pubsub topics publish prevasio-$HASH-images-to-sign \
        --message="$IMAGE" \
        --project=$PROJECT_ID > /dev/null
    done
  done
done
echo "Done publishing image events."
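
The script publishes each image as a single `IMAGE@DIGEST` string. If a listing returns an unexpected value, a guard such as the one sketched below can skip it instead of publishing a malformed message. The helper names are hypothetical, and the check assumes SHA-256 digests (`sha256:` followed by 64 hexadecimal characters); the exact message format the attestation function expects is not stated in this topic.

```shell
# Sketch: publish only references shaped like "<image path>@sha256:<64 hex>".
# Assumes PROJECT_ID and HASH are already set.
is_digest_reference() {
  printf '%s\n' "$1" | grep -Eq '^[^@[:space:]]+@sha256:[0-9a-f]{64}$'
}

publish_image() {
  if is_digest_reference "$1"; then
    gcloud pubsub topics publish "prevasio-${HASH}-images-to-sign" \
      --message="$1" --project="${PROJECT_ID}" > /dev/null
  else
    echo "Skipping malformed image reference: $1" >&2
    return 1
  fi
}

# Usage inside the innermost loop of the script above:
# publish_image "$IMAGE"
```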

-

-

Create GCR Topic and Event Subscription

Ensure a GCR Pub/Sub topic exists for container image activity, and subscribe to it with the event forwarder Cloud Function.

Do the following:

-

Check if a GCR topic exists; if not, create it:

gcloud pubsub topics list --project=$PROJECT_ID --format="value(name)" | grep "/gcr" || \
  gcloud pubsub topics create gcr --project=$PROJECT_ID

-

Create a subscription that pushes GCR event messages to the events forwarder function:

gcloud pubsub subscriptions create prevasio-$HASH-event-subscription \
  --topic=projects/$PROJECT_ID/topics/gcr \
  --expiration-period=never \
  --push-endpoint=https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-events-forwarder \
  --push-auth-service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \
  --ack-deadline=65 \
  --message-retention-duration=10m \
  --project=$PROJECT_ID
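
The `grep "/gcr"` check above also matches topics whose names merely start with `gcr`, such as a hypothetical `gcr-mirror`. A stricter variant, sketched here with hypothetical helper names, compares against the full topic path so that the `gcr` topic is created whenever it is actually missing.

```shell
# Sketch: create the gcr topic only when that exact topic is absent.
# Assumes PROJECT_ID is already set.
gcr_topic_exists() {
  # Reads topic names (one per line) on stdin, as printed by
  # gcloud pubsub topics list --format="value(name)".
  # -F: fixed string, -x: whole-line match, -q: no output.
  grep -Fqx "projects/${PROJECT_ID}/topics/gcr"
}

ensure_gcr_topic() {
  if gcloud pubsub topics list --project="${PROJECT_ID}" \
      --format="value(name)" | gcr_topic_exists; then
    echo "gcr topic already exists"
  else
    gcloud pubsub topics create gcr --project="${PROJECT_ID}"
  fi
}

# Usage:
# ensure_gcr_topic
```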

-

-

Pub/Sub Subscription Check (optional)

Note: This step is optional, but it’s helpful for verifying that the subscription is configured correctly. If the values are incorrect, you may not receive notifications as expected.

-

Describe a Pub/Sub Subscription

gcloud pubsub subscriptions describe prevasio-$HASH-event-subscription --project=$PROJECT_ID

This command displays the details of the Pub/Sub subscription named prevasio-$HASH-event-subscription in the specified Google Cloud project. Use it to verify the subscription's configuration, such as its topic, push endpoint, push authentication service account, and message retention settings.

-

Update a Pub/Sub Subscription to Use a Push Endpoint

gcloud pubsub subscriptions update prevasio-$HASH-event-subscription \
  --push-endpoint=https://$REGION-$PROJECT_ID.cloudfunctions.net/prevasio-$HASH-events-forwarder \
  --push-auth-service-account=${PROJECT_NUMBER}-compute@developer.gserviceaccount.com \
  --project=$PROJECT_ID

This command updates the Pub/Sub subscription named prevasio-$HASH-event-subscription in the specified Google Cloud project. It sets the subscription to push messages to the events forwarder Cloud Function endpoint (--push-endpoint) and to authenticate with the designated service account (--push-auth-service-account), so that Pub/Sub messages are delivered to the function with authentication.

Note: If you accidentally provide incorrect values for the push endpoint or service account, you can simply rerun this command with the correct values to update the subscription settings.
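
The describe output can also be compared against the expected value automatically. The sketch below reads the `pushConfig.pushEndpoint` field of the subscription and compares it with the endpoint pattern used when the subscription was created. The helper name `check_push_endpoint` is hypothetical.

```shell
# Sketch: compare the subscription's push endpoint with the expected URL.
# Assumes REGION, PROJECT_ID and HASH are already set.
check_push_endpoint() {
  expected="https://${REGION}-${PROJECT_ID}.cloudfunctions.net/prevasio-${HASH}-events-forwarder"
  actual="$(gcloud pubsub subscriptions describe "prevasio-${HASH}-event-subscription" \
    --project="${PROJECT_ID}" --format="value(pushConfig.pushEndpoint)")"
  if [ "${actual}" = "${expected}" ]; then
    echo "push endpoint matches"
  else
    echo "push endpoint mismatch: expected ${expected}, got ${actual:-<none>}"
    return 1
  fi
}

# Usage:
# check_push_endpoint || echo "Rerun the update command with the correct values."
```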

-

-

Authenticate and Set GCP Service Account

Ensure the correct service account is authenticated and set as the active identity in your GCP environment before running further commands.

Do the following:

-

Log in:

gcloud auth login

-

Create a key file for your service account:

gcloud iam service-accounts keys create $SERVICE_ACCOUNT_NAME.json --iam-account=$SERVICE_ACCOUNT_EMAIL --project=$PROJECT_ID

-

Activate the service account using its key file, which also sets it as the active account:

gcloud auth activate-service-account $SERVICE_ACCOUNT_EMAIL --key-file=$SERVICE_ACCOUNT_NAME.json
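
Before running further commands, it can help to confirm that the service account really is the active identity. The following sketch compares the active account reported by `gcloud auth list` with `$SERVICE_ACCOUNT_EMAIL`. The helper name `verify_active_account` is hypothetical.

```shell
# Sketch: confirm that the service account is the active gcloud identity.
# Assumes SERVICE_ACCOUNT_EMAIL is already set.
verify_active_account() {
  active="$(gcloud auth list --filter=status:ACTIVE --format="value(account)")"
  if [ "${active}" = "${SERVICE_ACCOUNT_EMAIL}" ]; then
    echo "active account: ${active}"
  else
    echo "unexpected active account: ${active:-<none>}" >&2
    return 1
  fi
}

# Usage:
# verify_active_account || exit 1
```

The key file created in the previous step is a long-lived credential, so consider restricting its file permissions and deleting it once onboarding is complete.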

-

-

-

Onboard the project via ACE API

Complete the onboarding process by sending an API request to register the new GCP project in the ACE platform.

Do the following:

-